Heuristic evaluations are one of the “discount” usability methods introduced over 20 years ago by Jakob Nielsen and Rolf Molich.

In theory, a heuristic evaluation involves having a trained usability expert inspect an interface for compliance with a set of guiding principles (heuristics), such as Nielsen’s 10 heuristics.

In practice, expert reviews of an interface, including cognitive walkthroughs, are often referred to as heuristic evaluations (HE), even though the methods differ.

Despite the reduction in costs that has come with remote and unmoderated usability testing, HE remains a popular method because it:

- finds many of the issues users encounter in a usability test;

- identifies issues that users are less likely to encounter in a usability test, such as more advanced or less frequently used areas; and

- can often be conducted in a fraction of the time of a traditional usability test.

The Evaluator Effect

We’ve written about HE before, and one often-overlooked recommendation is to have multiple evaluators conduct their own independent inspections of a website or software. Inspecting an interface involves a great deal of judgement, and where there is judgement there is disagreement.

This disagreement has come to be known as the Evaluator Effect and has been well documented by researchers, including Hertzum and Jacobsen. They found that, across a number of usability evaluation methods, agreement between evaluators ranges from 5% to 65%.

Some evaluators will be better than others at conducting heuristic evaluations, but that doesn’t mean a strong evaluator will consistently find a high number of usability problems on every UI he/she evaluates.

Much of the data on heuristic evaluations is a bit dated, with most of it collected on software from the 1990s. To show that these findings are still relevant, we conducted a small experiment on two websites.

We had four evaluators independently evaluate the Enterprise.com and Budget.com websites. We asked them to narrow their inspections to two tasks, renting a car and finding a rental location (a hybrid of HE and cognitive walkthrough, or CW). The two tasks were taken from the Comparative Usability Evaluation (CUE-8) data.

Task 1: Find a Rental Location

Find the address of the nearest rental office to the Hilton Hotel located at 921 SW Sixth Avenue, Portland, Oregon, United States 97204.

Task 2: Rent a Car

Assume you are planning an upcoming trip to Boston. Rent a car using the following information:

Location: Logan Airport, Boston MA

Rental Period: Fri April, 13th 2012 at 11.00am to Sunday April 15th at 5.00pm.

Class: Intermediate (no SUVs)

Extras: GPS Navigation and Car Seat for a 2-Year-old

If asked, use the following identification:

Name: John Smith

Email: john112233@mailinator.com

Phone: 303-555-1212

Credit Card: Visa

The Evaluators

Two of the evaluators each had over 30 years of experience with usability testing and heuristic evaluations; both are well-published usability experts. The other two evaluators were brand-new to usability and received a short introduction to the method: walking through a few examples, then reading and discussing two popular articles on inspection methods. This is about the same amount of exposure as a university class.

We gave the evaluators little guidance beyond asking them to identify usability issues and to spend as much or as little time as they needed (or had) to uncover them. We also didn’t require the evaluators to follow any specific heuristics, so, strictly speaking, this was more of an expert review than a heuristic evaluation. That mirrors how HE is applied in practice, as seen in the CUE studies.

Results

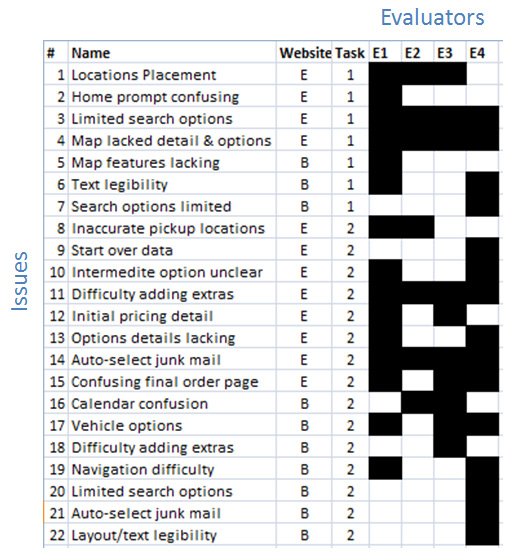

In total, the four evaluators found 22 unique issues. The evaluator-by-problem matrix in Figure 1 lists each problem on the y-axis and marks which evaluator found it on the x-axis.

Figure 1: An evaluator-by-problem matrix. Website column: E = Enterprise, B = Budget. Task column: 1 = Find Location, 2 = Rent a Car. E1 and E2 are the experts; E3 and E4 are the novice evaluators.

Out of the 22 identified usability issues, only four (18%) were found by all the evaluators (issues 3, 4, 11 and 14).

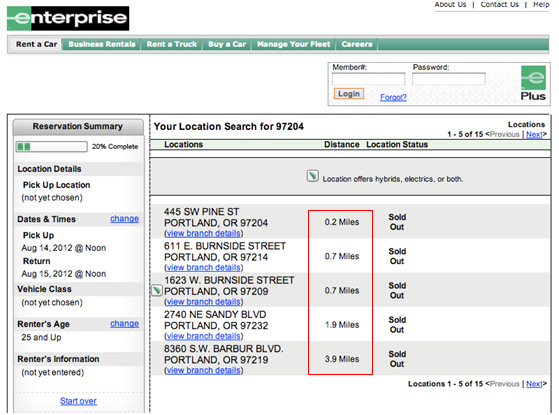

For example, when looking for the nearest Enterprise office, all four evaluators found the same issue with limited search options (issue #3). Evaluators were unable to enter a specific address (the hotel address) to determine which rental location was closest. They were able to find a list of locations near a zip code but had to map the locations relative to the hotel to figure out which one was actually closest (see Figure 2).

Figure 2: Illustration of issue #3. Searching by address returns only distances from a zip code, so users must map the addresses themselves.

Single Evaluator Issues

As is common with interface inspections, many issues were found by only a single evaluator. In this case, eight issues (36%) were found by only one evaluator, twice as many as were found by all four evaluators.
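Both counts come from tallying rows of the evaluator-by-problem matrix. The Python sketch below shows that tally, assuming the matrix is stored as one tuple of booleans per issue (True means that evaluator reported it). The five-issue matrix and the helper names `summarize` and `pct` are hypothetical illustrations, not the study's Figure 1 data; the last line only re-checks the article's reported shares (4 of 22 and 8 of 22).

```python
# Hypothetical toy data, NOT the study's Figure 1: one row per issue,
# one column per evaluator (E1, E2, E3, E4); True = evaluator reported it.
MATRIX = [
    (True, True, True, True),
    (True, False, False, False),
    (False, True, True, False),
    (False, False, False, True),
    (True, True, False, True),
]


def summarize(matrix):
    """Count issues found by every evaluator and by exactly one evaluator."""
    total = len(matrix)
    by_all = sum(1 for row in matrix if all(row))
    by_one = sum(1 for row in matrix if sum(row) == 1)  # True counts as 1
    return {"total": total, "by_all": by_all, "by_one": by_one}


def pct(count, total):
    """Whole-number percentage, matching how the article reports shares."""
    return round(100 * count / total)


if __name__ == "__main__":
    s = summarize(MATRIX)
    print(f"Found by all: {s['by_all']} of {s['total']} ({pct(s['by_all'], s['total'])}%)")
    print(f"Found by one: {s['by_one']} of {s['total']} ({pct(s['by_one'], s['total'])}%)")
    # Re-checking the article's reported shares for its 22 issues.
    print(pct(4, 22), pct(8, 22))  # 18 36
```

Storing each issue as a row keeps "found by all" and "found by exactly one" as simple per-row checks, so the same tally works for any number of evaluators.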

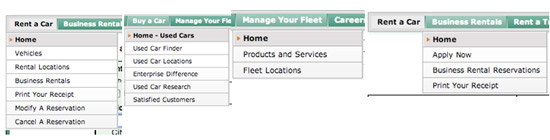

For example, after attempting Task 1 on the Enterprise website and then trying to start a new search, Evaluator 1 (one of the experts) had an issue with the “Home” option on the website (issue #2). Four different drop-down menus (Rent a Car, Business Rentals, Buy a Car, and Manage Your Fleet) each have a “Home” option, but each takes you to a different landing page rather than a general Enterprise home page.

Figure 3: Illustration of issue #2. Only one evaluator flagged as confusing the four “Home” links across four menus, each of which leads to a different homepage.

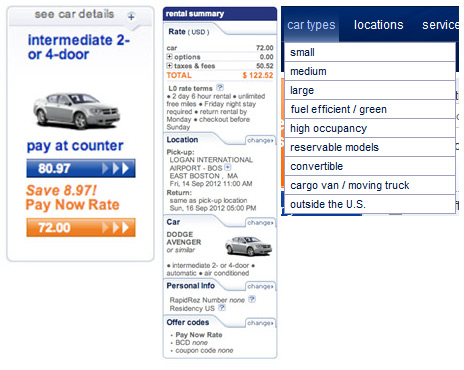

Not all problems were encountered on Enterprise. On the Budget website, while attempting Task 2, three evaluators (one expert and both novices) were unable to specify “intermediate non-SUV” as their vehicle preference. The “car types” drop-down menu doesn’t offer an “intermediate” option at all, for SUV or non-SUV, because it uses the phrase “fuel efficient/green” instead of the “intermediate” term evaluators were asked to select.

Figure 4: Illustration of issue #17. Three evaluators had a problem specifying that they wanted an “intermediate” vehicle that wasn’t an SUV.

Novices Detect Issues Experts Miss

On examination, even the issues identified by the novices appear to be legitimate usability issues.

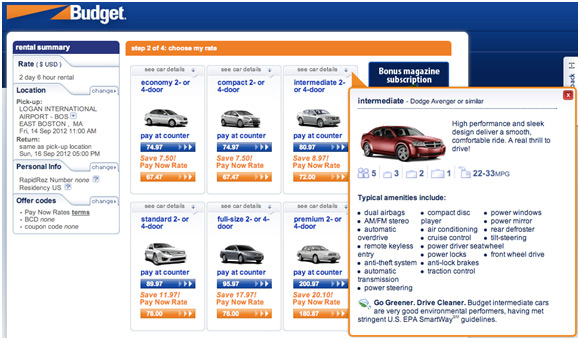

For example, while attempting Task 2 on the Budget site, only one evaluator (a novice) had difficulty adding the extras to the car rental (issue #18). The evaluator clicked “see car details” on the rental car, assuming the available upgrade options would be listed with the car details. However, the upgrade options appear on the next step, “choose my options,” rather than under the car details.

Figure 5: Illustration of issue #18. One evaluator expected things like GPS and car seats to be under the car details link.

Discussion and Conclusion

This simple experiment, a replication of many prior experiments from the literature, illustrates several points about expert reviews and heuristic evaluations.

- Different evaluators tend to find different issues: This is consistent with Molich’s experience with the CUE studies. He has repeatedly said that even when evaluators don’t discover an issue themselves, most agree it is valid once it is pointed out. In our data, evaluators 1 and 2 agreed on 55% of the issues, counting both the issues they both discovered and the issues neither discovered. The average agreement across all six pairs of evaluators is 57%, which is on the high side of the range reported by Hertzum and Jacobsen. The higher agreement likely comes from using the same method and a well-defined set of tasks.

- Experts find more issues, but not all issues: The more seasoned evaluators (E1 and E2) found most (59%) of the issues the novices found. However, the novices also found issues the experts did not uncover, amounting to 27% of the 22 total. A strategy of using diverse evaluators, even those new to HE, can generate more insights.

- It’s better to spend the same resources on multiple evaluators, each spending less time with an interface, than on a single evaluator: While not a perfect measure of thoroughness, even the best evaluator found only 68% of the total issues, and in this case an expert and a novice both reached that percentage.

- Concerns about false alarms: Just because an expert says or predicts something is an issue doesn’t mean a user will actually have trouble with it. Issues found by only a single evaluator are especially open to question. Agreement is, of course, not the goal of an expert evaluation, and even when there is consensus or near-consensus, all the experts can be wrong (think weather forecasters). For example, is the inability to specify “no SUV” really an issue, or is it an artifact of the task scenario we gave the evaluators?

- Concerns about misses: It’s unclear which problems are missed when an expert reviews an interface only loosely against a set of principles.
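The agreement and thoroughness figures in the points above can be computed from an evaluator-by-problem matrix. The Python sketch below assumes that agreement for a pair of evaluators means the share of issues that both found or both missed, which is our reading of “issues discovered and not discovered”; other agreement measures exist and would give different numbers. The matrix is hypothetical toy data, and `pair_agreement`, `average_pairwise_agreement`, `thoroughness`, and `coverage` are illustrative helper names.

```python
from itertools import combinations

# Hypothetical toy data, NOT the study's matrix: one row per issue,
# one column per evaluator (E1, E2, E3, E4); True = evaluator reported it.
EVALUATORS = ("E1", "E2", "E3", "E4")
MATRIX = [
    (True, True, True, True),
    (True, False, False, False),
    (False, True, True, False),
    (False, False, False, True),
    (True, True, False, True),
]


def pair_agreement(matrix, i, j):
    """Share of issues where evaluators i and j agree: both found it or both missed it."""
    agreements = sum(1 for row in matrix if row[i] == row[j])
    return agreements / len(matrix)


def average_pairwise_agreement(matrix, n_evaluators):
    """Mean pair_agreement over every pair of evaluators (4 evaluators -> 6 pairs)."""
    pairs = list(combinations(range(n_evaluators), 2))
    return sum(pair_agreement(matrix, i, j) for i, j in pairs) / len(pairs)


def thoroughness(matrix, i):
    """Share of all known issues that evaluator i found."""
    return sum(1 for row in matrix if row[i]) / len(matrix)


def coverage(matrix, evaluators):
    """Share of all known issues found by at least one evaluator in the group."""
    return sum(1 for row in matrix if any(row[i] for i in evaluators)) / len(matrix)


if __name__ == "__main__":
    for i, j in combinations(range(len(EVALUATORS)), 2):
        print(f"{EVALUATORS[i]}-{EVALUATORS[j]} agreement: {pair_agreement(MATRIX, i, j):.0%}")
    print(f"Average pairwise agreement: {average_pairwise_agreement(MATRIX, len(EVALUATORS)):.0%}")
    for i, name in enumerate(EVALUATORS):
        print(f"{name} thoroughness: {thoroughness(MATRIX, i):.0%}")
    print(f"E1 + E3 coverage: {coverage(MATRIX, (0, 2)):.0%}")
```

With this toy matrix, no single evaluator finds more than 60% of the issues, while the pair E1 and E3 together covers 80%, the same pattern that motivates spreading an inspection budget across several evaluators.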

Heuristic evaluations are relatively cheap and quick to perform if you have access to a few experts. This study shows that even briefly trained inspectors can uncover many of the problems experts would, as well as new issues that even experts agree are valid problems. However, many researchers over the decades have been concerned about what data is lost with these faster and cheaper approaches. Is the discount worth the price?

We recommend using HE in addition to usability testing, and our team does so because we have easy access to multiple evaluators. However, testing with users alongside inspections isn’t always possible or within a client’s budget. This raises the question: how many problems are missed, and how many problems are really false alarms?

This is an issue we’ll take up in the next blog post on this important method in user experience research.