Recently Nielsen conducted a study comparing reading speeds on a printed book, a Kindle and an iPad. With 24 users, the study concluded that reading the same story took about 6.2% longer on the iPad (p = .06) and about 10% longer on the Kindle (p < .01) than in a printed book.

From this data Nielsen concluded “Books Faster than Tablets”: while tablets have improved dramatically over the years, they are still slower than the book (albeit modestly).

Put another way, the data tells us we can be 94% sure a difference as large as 6.2% between the iPad and book isn’t due to chance alone.

Would you think differently about the difference in reading speeds if the result said 96% instead of 94%? John Grohol, PsyD, did: he wrote an article using this study as an example of “bad research.” One of Grohol’s main criticisms is that Nielsen’s statistics don’t back up his claim that books are faster than tablets.

In statistics classes students are taught to conclude something is “statistically significant” if the p-value is less than .05. Under this criterion the Kindle difference is statistically significant (p < .01) and the iPad difference is not (p = .06).

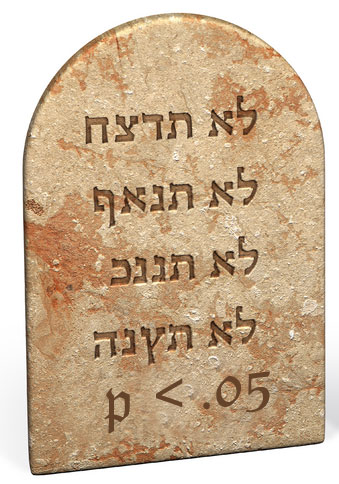

P < .05 is a Convention not a Commandment

Establishing a difference as “statistically significant” when the p-value is less than .05 is a convention. It is a convention that’s been taught for decades and used as a rejection criterion in most peer-reviewed journals. Interestingly enough, there is no mathematical reason why we use .05 instead of, say, .06. There are, however, some good reasons for the choice, which include:

- It is a nice round number

- It corresponds to roughly two standard errors (1.96, to be precise) on either side of the mean in a normal distribution

- It intuitively seems about right

- It comes from a time when we relied on tables of values instead of software
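The “two standard errors” point is easy to check. Here is a minimal sketch using `NormalDist` from Python’s standard library; the variable names are just for illustration:

```python
from statistics import NormalDist

std_normal = NormalDist()  # mean 0, standard deviation 1

# Two-sided cutoff for p = .05: the z-value that leaves 2.5% in each tail.
z_05 = std_normal.inv_cdf(1 - 0.05 / 2)

# Two-sided tail area beyond exactly two standard errors.
p_at_two_se = 2 * (1 - std_normal.cdf(2.0))

print(f"z for p = .05: {z_05:.2f}")                    # 1.96
print(f"p at 2 standard errors: {p_at_two_se:.4f}")    # 0.0455
```

So .05 sits at about 1.96 standard errors, and a full two standard errors would give a p of roughly .046. Close to a round number, but nothing magical.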

Oh and in case you wondered, there is no mathematical connection between a p of .05 and testing with five users… except that Magic Number 5.

Conventions are helpful because they remove some of the subjectivity that can “stack the data” in a way that favors the author’s hypothesis. Conventions are bad when used as commandments without thought for context. Numbers are objective but interpretation always involves judgment about the context and consequences of being fooled by chance.

- The FDA will approve a new drug (p = .06)

- Autism is associated with immunizations (p = .06)

- Reading speed is faster on books than on tablets (p = .06)

All three examples have the same p-value but the context certainly matters. We require more evidence when money and mortality are involved (the first two are hypothetical p-values based on actual scenarios).

Peer-reviewed Journals

Peer-reviewed journals have a special place in contributing to our scientific knowledge, and rightfully so. But they don’t have a monopoly on ideas, research or inspiration. A problem with the emphasis on p <.05 is it doesn’t account for the magnitude of the difference.

With a large enough sample size almost any difference is statistically significant. When comparing reading speeds, it’s more interesting to have a difference of 6% at p = .06 than a difference of 1% at p = .01. Yet only the latter would make it into a peer-reviewed journal.
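To see how sample size alone can push a small difference past the threshold, here is a rough sketch. It uses a simple two-sided z-test as a stand-in, which is not necessarily the test Nielsen ran, and the standard deviation of 15 percentage points and the sample sizes are made-up assumptions for illustration only, not values from the study:

```python
from math import sqrt
from statistics import NormalDist

def two_sided_p(mean_diff, sd, n):
    """Two-sided p-value for a mean difference, using a normal approximation."""
    z = mean_diff / (sd / sqrt(n))
    return 2 * (1 - NormalDist().cdf(abs(z)))

ASSUMED_SD = 15.0  # hypothetical spread of per-user differences, in percentage points

# Same 1% difference every time; only the number of users changes.
for n in (24, 100, 1000, 5000):
    p = two_sided_p(mean_diff=1.0, sd=ASSUMED_SD, n=n)
    print(f"n = {n:>5}: 1% difference, p = {p:.3f}")
```

Under these assumptions the same 1% difference is nowhere near significant with 24 users and comfortably below .05 with a few thousand, even though its practical importance hasn’t changed at all.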

Applied Research

Being 94% confident chance can’t explain the difference in reading speeds, I’m convinced that people read Ernest Hemingway a little faster on books than tablets. The limitations of this study are more about the type of users and material tested than the p-values—a point also raised by Grohol. Perhaps this finding doesn’t apply to James Joyce, Jim Collins or Japanese readers.

In applied research you’ll never be able to test enough people, explore every possibility, or address all the limitations of your data. Applied research is about making better decisions with data and with limited time and money. Fortunately life and death are rarely consequences of making wrong decisions in applied research.

By all means, conduct your research, summarize it on the web and report your p-values. Tell us what conclusion you drew from the data and see if your readers are convinced. If there is a compelling story it will be replicated or refuted in another web article. If your p-value is less than .05 it might even make it into a peer-reviewed journal … although fewer people will read it.

For the interested reader, see: Statistics as Principled Argument and Beyond ANOVA: Basics of Applied Statistics.