Topics

Automated Lab Based Usability Testing

Facilitators in usability tests are highly variable. The results of many studies, including the well-known Comparative Usability Evaluations (CUEs), have consistently shown that different usability facilitators are inconsistent in how they interact with participants in a usability lab, producing dissimilar results. What’s more, testing more than a few participants a day leads to facilitator

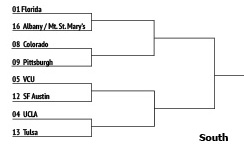

What the NCAA Tournament & Usability Testing Have in Common

It’s that time of year again: March Madness. The Madness in March comes from the NCAA college basketball tournament, which packs dozens of games, with unanticipated winners and losers, into the final days of March. It’s also the time of year when a lot of people start working directly with probability, whether they know it

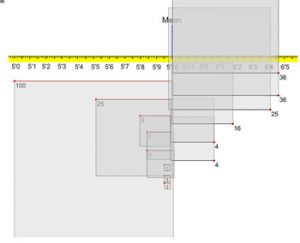

10 Things to Know About Variability in the User Experience

Most people are comfortable with the concept of an average or percentage as a measure of quality. An equally important component of measuring the user experience is to understand variability. Here are 10 things to know about measuring variability in the user experience. Variability is inherent to measuring human performance. People have different browsing patterns,

Understanding Effect Sizes in User Research

The difference is statistically significant. When using statistics to make comparisons between designs, it’s not enough to just say differences are statistically significant or only report the p-values. With large sample sizes in surveys, unmoderated usability testing, or A/B testing you are likely to find statistical significance with your comparisons. What you need to know

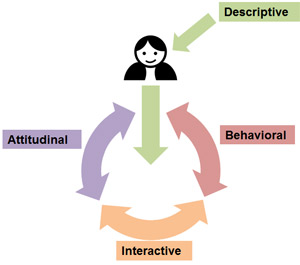

4 Types of Customer Analytics Data to Collect

While they may be called customers, users, buyers, or even guests, they are all people. Organizations quantify, classify, categorize, and track people well before they’ve even made a purchase and throughout their customer lifetime. These analytics provide a constant stream of data that should improve the decision-making process. To get a better understanding of

Is Observing One User Worse Than Observing None?

Seeing is believing. Observing just a handful of users interact with a product can be more influential than reading pages of a professionally done report or polished presentation. But what if a stakeholder only has time to watch two or just one of the users in a usability study? Are there circumstances where watching some

10 Things That Can Go Wrong in a Usability Test

A lot of planning goes into a usability test. Part of good planning means being prepared for the many things that can go wrong. Here are the ten most common problems we encounter in usability testing and some ideas for how to avoid or manage them when they inevitably occur. Users don’t show up:

10 Ways to Get a Horrible Survey Response Rate

You’ve worked hard designing your survey. You need data to make better decisions for your product, and you need people to answer your survey! Unfortunately, in our quest to squeeze the most out of our precious participants, it gets difficult not to commit some survey sins. Inevitably one or a few of these response rate killers

4 Steps to Translating a Questionnaire

An effective questionnaire is one that has been psychometrically validated. This primarily means the items are reliable (consistent) and valid (measuring what we intend to measure). So if we say a questionnaire measures perceptions of website usability, it should be able to differentiate between usable and unusable websites and do so consistently over time. With

Measuring Usability Best Practices in 5 Words

While there are books written on measuring usability, it can be easy to get overwhelmed by the details and intimidated by the thought of having to deal with numbers. If I had to use five words to describe some best practices and some core principles of measuring usability, here they are. 1. Multi-method There are

10 Ways to Conduct Usability Tests with Credit Cards & Personal Data

A key principle of usability testing is that users should simulate actual usage as much as possible. That means using realistic tasks that represent users’ most common goals on the website or app they’ll be working with ‘out in the wild.’ Usability testing is inherently contrived but we still want to provide as realistic a

How to Deal With Quantitative Criticism

It’s often said that you can get statistics to show anything you want. And while this is true to an extent, it’s equally true of any method, qualitative or quantitative. With statistics, however, it’s a bit harder because there is a numeric audit trail that allows others to better understand your assumptions and conclusions. Unfortunately