Was that what you were expecting?

How do you feel when you’re pleasantly surprised by the quality of a hotel room, the service at a restaurant, or the features in a new app?

And how do you feel when it takes 20 minutes to pay your bill online (after calling customer service) when you expected it to take a couple clicks? Or that cleaning product you purchased off TV that doesn’t actually clean your clothes as promised?

Customer expectations are closely linked to customer satisfaction and loyalty. Understanding customer expectations helps diagnose problems and leads to higher satisfaction, repeat customers, and customer referrals.

While expectations are important to measure, the best way to measure them is debatable. In fact, there’s even disagreement on how to model the role of customer expectations on satisfaction. Before we talk about measurement, here are three popular approaches for how to model customer expectations:

- Disconfirmation: The experience either falls short of or exceeds expectations, and the size of that gap affects a customer’s satisfaction most. This is probably what most people have in mind when they think of expectations.

- Experiences-only: The most recent experience overshadows prior expectations and has the biggest effect on satisfaction. For example, if you expect your coffee at Starbucks to take a long time to be made and it actually takes a long time, you’re still dissatisfied, even though your expectations were met (or even exceeded).

- Ideal point: Any deviation from what a customer expects lowers satisfaction, even if the experience is better than expected. While some research supports this model, I think it likely has limited applications; I have a hard time coming up with examples where I’m less satisfied because my expectations were exceeded.

Like most academic models, all are wrong but some are useful. In measuring the customer experience, I’ve found the disconfirmation and experiences-only models best describe the data I’ve seen.

With the models in mind, here are three approaches for measuring expectations.

Qualitative Expectations

A common technique in moderated usability testing is to ask participants what they expect to see before they click a link or button or submit a form. After they see the result, you ask whether what they actually experienced was what they expected.

The mismatch in expectations can help you diagnose interaction problems. The cause can be a label, a picture, or, more often, a mismatched mental model. If you poll enough users, you’ll notice a fair number of “yeah, that’s what I expected” statements, even though what participants said they expected didn’t match what they actually experienced. This lends credence to the experiences-only model.

While this sort of expectation analysis delivers more qualitative data, expectation measures are often quantitative.

Difference Scores

A common way of measuring expectations is to ask participants their expectations prior to experiencing a product, website, brand, or service, and then measure their experience after using it with the same scale. These are often referred to as difference scores (because you look at the difference between the responses for each participant). See Chapter 13 in Customer Analytics For Dummies if you’re interested in reading more about it.

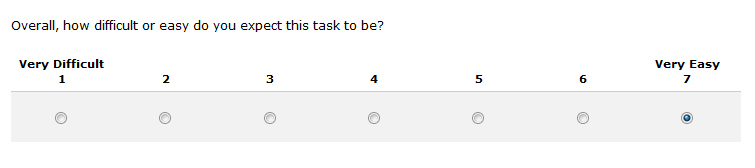

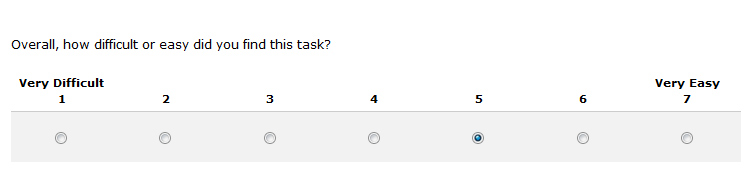

Albert & Dixon used a similar approach when measuring task ease. Participants rate how difficult they expect a task to be, attempt the task, and then rate how difficult it actually was on a 7-point difficulty scale (like the SEQ). Scores can be plotted in a 2×2 grid or examined as difference scores.

For example, the figure below shows a participant who expected a task to be “Very Easy” (a 7 on the 7-point scale). After completing the task, the participant rated it a 5. The result is a difference score of 2 (7 − 5): the task was harder than expected.
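The arithmetic above can be sketched for several participants at once. This is a minimal illustration, not a prescribed procedure: the ratings are made-up values, the name `difference_scores` is hypothetical, and the sign convention (expectation minus experience) simply follows the 7 − 5 example, so a positive score means the experience fell short of the expectation.

```python
from statistics import mean

# Hypothetical ratings on a 7-point ease scale (1 = very difficult, 7 = very easy).
# Each tuple is (expected ease, experienced ease) for one participant.
ratings = [(7, 5), (6, 6), (4, 6), (5, 3)]


def difference_scores(pairs):
    """Return expectation minus experience for each participant."""
    return [expected - experienced for expected, experienced in pairs]


diffs = difference_scores(ratings)  # one score per participant
mean_diff = mean(diffs)             # average gap across participants

print(diffs)      # [2, 0, -2, 2]
print(mean_diff)  # 0.5
```

The first participant reproduces the example in the text (7 − 5 = 2). Looking at the individual scores as well as the mean matters here, because positive and negative gaps can cancel out in the average.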

Problems with Difference Scores

As with qualitative expectations in moderated usability testing, difference scores have two pitfalls:

- Biases: Participants tend to under-report differences in order to remain cognitively consistent, and they may even misremember what they originally expected. With hindsight bias, respondents want to appear consistent (“yeah, that’s what I expected”), so they adjust their experience scores to match (or more closely match) their expectation scores. This bias isn’t necessarily intentional, but like many cognitive biases, it affects memory and thinking in unexpected and subtle ways.

- Benchmarks: The post-experience ratings can act as a benchmark because they’re generally more salient and available than a person’s earlier expectation rating. This again lends credence to the experiences-only model.

Independent Expectations

One way to avoid the problem of pre-experience measures affecting post-experience measures is to have an independent group of participants provide the ratings.

This is something we did when evaluating task difficulty. To use this approach, you collect data from two reasonably identical and independent groups of participants. Have one group rate their expectations about a task, website, or product experience; have the second group experience the task or product and rate the experience using the same scale.

You can examine the difference between the two groups’ scores and know that the consistency bias described earlier isn’t occurring, because one group’s expectation scores can’t influence the other group’s experience scores. Be sure the groups closely match in experience and demographics.
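One way to examine that between-group difference is to compare the two groups’ mean ratings. The sketch below uses made-up ratings and a hypothetical helper, `welch_t`, which computes the mean difference along with a Welch two-sample t statistic and its approximate degrees of freedom. The choice of a Welch t-test is an assumption for illustration, not a method described above; any appropriate two-sample comparison could be substituted.

```python
from math import sqrt
from statistics import mean, variance

# Hypothetical 7-point ratings from two independent, comparable groups.
expectation_group = [6, 7, 5, 6, 6, 7, 5, 6]  # rated expected ease only
experience_group = [4, 5, 3, 5, 4, 6, 4, 5]   # attempted the task, then rated ease


def welch_t(group_a, group_b):
    """Return (mean difference, Welch t statistic, approximate degrees of freedom)."""
    na, nb = len(group_a), len(group_b)
    # Squared standard error contributed by each group.
    va = variance(group_a) / na
    vb = variance(group_b) / nb
    diff = mean(group_a) - mean(group_b)
    t = diff / sqrt(va + vb)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (va + vb) ** 2 / (va ** 2 / (na - 1) + vb ** 2 / (nb - 1))
    return diff, t, df


diff, t, df = welch_t(expectation_group, experience_group)
print(f"mean difference = {diff:.2f}, t = {t:.2f}, df = {df:.1f}")
```

With these made-up numbers the expectation group averages 6.0 and the experience group 4.5, so the between-group difference is 1.5 points. Because the groups are independent, there are no per-participant difference scores; the comparison happens at the group level.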

Experience Satisfaction Only

Of course, you can also ask participants to rate only their satisfaction with the experience, using any of a number of rating scales (including the SEQ). This likely captures much of their expectations anyway (at least according to the experiences-only model). In other words, participants who rate low in satisfaction likely didn’t have their expectations met; those who rate high in satisfaction likely did.

Summary

Customer expectations play a major role in understanding customer satisfaction. While it’s unclear how best to model the effect of expectations on satisfaction (disconfirmation, experiences-only, or ideal point), you can and should assess customer expectations.

- In moderated usability testing, you can diagnose interaction problems by identifying areas where participants’ expectations don’t match the actual experience (even if the participant thinks they do).

- Difference scores (the gap between experiences and expectations) are an effective method for gaining insight into exceeded or missed expectations.

- Difference scores are likely skewed by cognitive biases (misremembering and cognitive dissonance) and benchmarking and may not offer a true picture of expectations.

- Having separate, comparable groups of participants rate expectations and experiences yields difference scores that aren’t affected by these biases and benchmarking.

- Experience scores alone likely capture much of customers’ expectations.