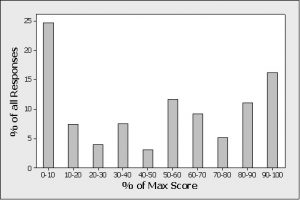

What Do You Gain from Larger-Sample Usability Tests?

We typically recommend small sample sizes (5–10) for iterative usability testing meant to find and fix problems (formative evaluations). For benchmark or comparative studies, where the focus is on detecting differences or estimating population parameters (summative evaluations), we recommend larger sample sizes (20–100+). Usability testing can be used to uncover problems and assess the