When thinking about user experiences with websites or software, what is the difference between capabilities and functions? Is there any difference at all?

In software engineering, a function is code that takes inputs, processes them, and produces outputs (such as a math function). The word capability doesn’t have a formal definition, but it most often appears in the phrase Capability Maturity Model, a formal model that describes the capability and maturity of a software development team.

For everyday usage, Merriam-Webster defines the words as follows:

- Capability: The facility or potential for an indicated use or deployment.

- Function: The action for which a person or thing is specially fitted or used or for which a thing exists.

The everyday definitions are not identical, but they are similar, with both referring to the extent to which something can be used for a given purpose.

We became interested in this topic as part of our ongoing research into alternative forms of the UMUX-Lite Usefulness item.

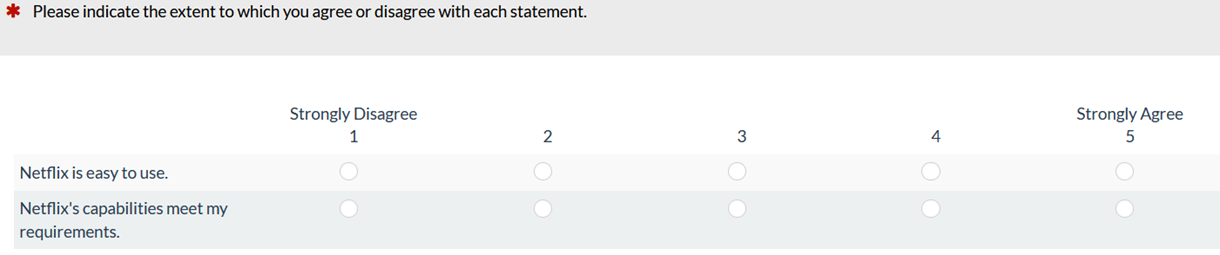

The UMUX-Lite is an increasingly popular UX questionnaire. It has only two items, one for Perceived Ease-of-Use and one for Perceived Usefulness (the same as the factors of the well-known Technology Acceptance Model), and it has been shown to correlate significantly and correspond closely with concurrently collected UX and loyalty metrics. Figure 1 shows the standard version of the UMUX-Lite.

Figure 1: Example of the standard UMUX-Lite (five-point version; created with MUIQ).

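Over the past six months, we’ve researched whether different ways of simplifying the Usefulness item had a significant effect on the resulting scores. So far, there have been no significant differences in response option distributions or means between the standard form and the alternates from our two previous studies, which used “functionality” or “features” in place of “capabilities” and “needs” in place of “requirements.”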

There are research contexts in which the word functions might be a better fit than capabilities, functionality, or features, so we ran a study to see whether we could include it as an alternative form of the UMUX-Lite Usefulness item (while continuing to use “needs” in place of “requirements”).

The Study

We included this new version of the Usefulness item (“{Product}’s functions meet my needs”) in a survey of the UX of food delivery services. The data were collected from December 2020 through January 2021 using an online U.S.-based panel (n = 212). The survey was programmed in MUIQ so that the two versions of the item were randomly placed and would not appear next to each other.

Results: Comparison of Means

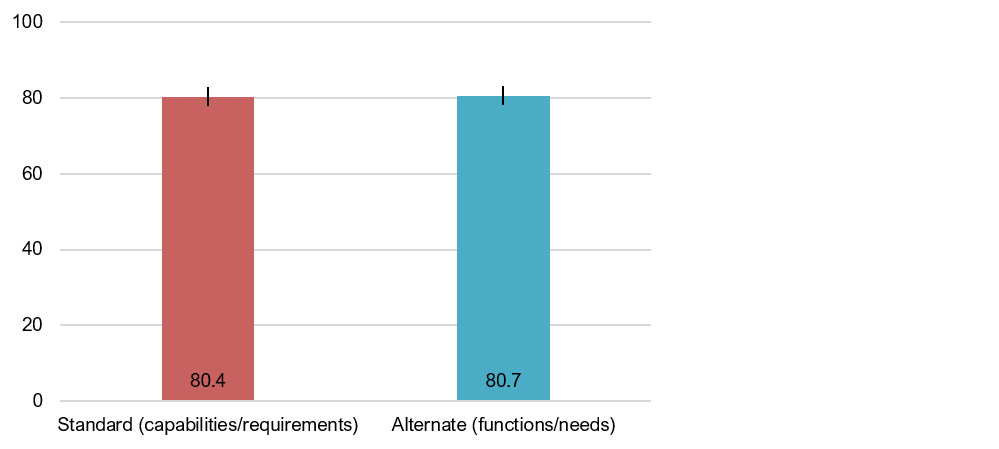

Figure 2 shows the mean UMUX-Lite scores from the study (within-subjects comparison). There was no significant difference between the standard and simplified UMUX-Lite scores (t(211) = .47; p = .64).

Figure 2: Mean UMUX-Lite scores (with 95% confidence intervals).

The mean difference was −.24, with a 95% confidence interval from −1.22 to .75. The confidence interval contains 0, so it’s plausible that there is no real difference in means. If there is a real difference, the limits of the confidence interval show that it’s small, unlikely to exceed 1.22 points on the 0–100-point UMUX-Lite scale.
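The within-subjects comparison above can be sketched in a few lines of Python. This is a minimal illustration, not the analysis code used for the study: the function names, the ratings, and the critical t value are all hypothetical, and the scoring formula is the commonly used linear rescaling of the two five-point items to 0–100, which this article does not spell out.

```python
import math
import statistics

def umux_lite_score(ease, usefulness, points=5):
    """Rescale two item ratings (each 1..points) to a 0-100 score.

    Assumed scoring rule: subtract the minimum possible sum (2) and
    divide by the range of the sum, 2 * (points - 1).
    """
    return (ease + usefulness - 2) * 100 / (2 * (points - 1))

def paired_mean_difference(a, b, t_crit):
    """Return (mean difference, t statistic, CI low, CI high) for paired scores.

    t_crit is the two-sided critical t value for n - 1 degrees of freedom,
    passed in by the caller so the sketch needs only the standard library.
    """
    diffs = [x - y for x, y in zip(a, b)]
    mean_diff = statistics.mean(diffs)
    se = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return mean_diff, mean_diff / se, mean_diff - t_crit * se, mean_diff + t_crit * se

# Hypothetical ratings from six respondents (not data from the study).
ease = [5, 4, 4, 3, 5, 4]
useful_standard = [5, 4, 4, 3, 4, 4]
useful_alternate = [4, 4, 5, 3, 4, 3]

standard = [umux_lite_score(e, u) for e, u in zip(ease, useful_standard)]
alternate = [umux_lite_score(e, u) for e, u in zip(ease, useful_alternate)]

# 2.571 is the 97.5th percentile of t with 5 degrees of freedom.
mean_diff, t_stat, ci_low, ci_high = paired_mean_difference(standard, alternate, 2.571)
print(f"mean diff = {mean_diff:.2f}, t = {t_stat:.2f}, 95% CI [{ci_low:.2f}, {ci_high:.2f}]")
```

With a sample the size of this study (n = 212, so 211 degrees of freedom), the critical value would be about 1.97 rather than 2.571.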

Results: Comparison of Distribution of Response Options

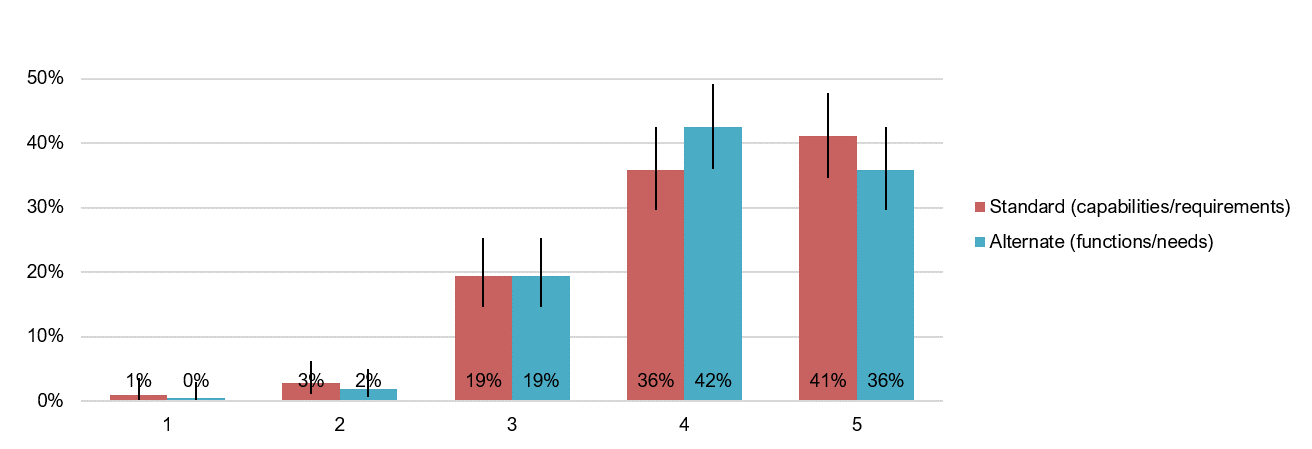

Figure 3 shows the distributions of response options for the standard and alternate versions of the Perceived Usefulness item. The response patterns were similar, and for each response option, there was a substantial overlap of 95% confidence intervals.

However, compared to the other variations of the Usefulness item that we’ve assessed, this variant showed the largest shift in the distribution of response options. Specifically, the shift of about 5 percentage points between the strongly agree (5) and agree (4) options was larger than the shift of roughly 1–3 percentage points we’ve seen with other variants.

In addition to the mean, it’s common in UX research to assess data collected using multipoint scales with top-box scores. When there are five response options, both top-one (percentage of 5s) and top-two (percentage of 4s and 5s) box scores are commonly used. For these data, the top-two-box scores were 77% for the standard version and 78% for the alternate. As expected, a McNemar test of the difference between these percentages was not statistically significant (mid-probability p = .60; 95% confidence interval around the difference of 1% ranged from −3.8 to 6.6%).

The top-one-box scores, as shown in Figure 3, were 41% for the standard version and 36% for the alternate. In this case, the McNemar test was statistically significant (mid-probability p = .03; 95% confidence interval around the difference of 5% ranged from .5 to 9.8%).

Figure 3: Distributions of Usefulness response options from the food delivery survey with 95% confidence intervals.
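For readers who want to run this kind of top-box comparison on their own data, here is a rough Python sketch of top-box scoring and a mid-p McNemar test. The ratings are hypothetical and the helper names `top_box` and `mcnemar_mid_p` are ours for illustration. The mid-p formula shown is one common formulation (twice the binomial tail probability minus the point probability at the smaller discordant count), and we assume rather than confirm that it matches the procedure behind the p-values reported above.

```python
from math import comb

def top_box(ratings, k=1, points=5):
    """Proportion of ratings that fall in the top k response options."""
    cutoff = points - k + 1
    return sum(r >= cutoff for r in ratings) / len(ratings)

def mcnemar_mid_p(b, c):
    """Two-sided mid-p McNemar test from the two discordant counts.

    b: respondents in the top box for the first version only
    c: respondents in the top box for the second version only
    """
    n = b + c
    if n == 0:
        return 1.0
    lo = min(b, c)
    pmf = lambda x: comb(n, x) / 2 ** n  # Binomial(n, 0.5) probability
    cdf = sum(pmf(x) for x in range(lo + 1))
    return min(1.0, 2 * cdf - pmf(lo))

# Hypothetical paired ratings from ten respondents (not data from the study).
standard = [5, 5, 4, 4, 3, 5, 4, 2, 5, 4]
alternate = [5, 4, 4, 4, 3, 4, 4, 2, 5, 5]

top_one_standard = top_box(standard, k=1)
top_one_alternate = top_box(alternate, k=1)
top_two_standard = top_box(standard, k=2)
top_two_alternate = top_box(alternate, k=2)

# Discordant pairs for the top-one-box comparison.
b = sum(s == 5 and a != 5 for s, a in zip(standard, alternate))
c = sum(a == 5 and s != 5 for s, a in zip(standard, alternate))
mid_p = mcnemar_mid_p(b, c)
print(top_one_standard, top_one_alternate, b, c, mid_p)
```

Because both versions were rated by the same respondents, only the discordant pairs (people who land in the top box for one version but not the other) carry information about the difference, which is why the test takes just those two counts.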

We will look to replicate these results in future research because this is the first time we’ve detected any significant difference in an alternate version of the Perceived Usefulness item. As the famous statistician Sir Ronald Fisher wrote in 1929 (p. 191),

The test of significance only tells him what to ignore, namely all experiments in which significant results are not obtained. He should only claim that a phenomenon is experimentally demonstrable when he knows how to design an experiment so that it will rarely fail to give a significant result. Consequently, isolated significant results which he does not know how to reproduce are left in suspense pending further investigation.

Results: Scale Reliabilities

Scale reliability for both versions, measured with coefficient alpha, was .74. This exceeds the typical reliability criterion of > .70 for research metrics and supports the use of either version.
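As a rough illustration of how coefficient alpha is computed for a two-item scale like the UMUX-Lite, the sketch below applies the standard formula, alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores). The ratings and the function name `coefficient_alpha` are hypothetical and are not taken from the study.

```python
import statistics

def coefficient_alpha(items):
    """Coefficient (Cronbach's) alpha.

    items: one list of ratings per item, aligned by respondent.
    """
    k = len(items)
    totals = [sum(ratings) for ratings in zip(*items)]
    sum_item_variances = sum(statistics.variance(item) for item in items)
    return k / (k - 1) * (1 - sum_item_variances / statistics.variance(totals))

# Hypothetical ratings from six respondents (not data from the study).
ease = [5, 4, 4, 3, 5, 2]
usefulness = [4, 4, 5, 3, 5, 3]

alpha = coefficient_alpha([ease, usefulness])
print(f"alpha = {alpha:.2f}")
```

To compare versions as in the study, alpha would be computed twice: once pairing the Ease-of-Use item with the standard Usefulness item and once pairing it with the alternate.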

Summary and Takeaways

The two items of the standard UMUX-Lite questionnaire measure (1) Perceived Ease-of-Use: “The system is easy to use” and (2) Perceived Usefulness: “The system’s capabilities meet my requirements.”

The standard wording of the Perceived Ease-of-Use item is simple and straightforward, but the standard wording of the Perceived Usefulness item, while grammatically simple, contains two infrequently used, multisyllabic words: “capabilities” and “requirements.” In this and two previous studies, we have simplified the wording of this item.

The results of these studies have demonstrated the practical measurement equivalence of three alternative forms of the UMUX-Lite Perceived Usefulness item. UX researchers and practitioners can use any of these alternates in place of the standard version when computing means or top-two-box scores. Researchers who plan to compute top-one-box scores should avoid the third alternate, the “functions” wording tested in this study (at least for now, pending replication). The three alternates are:

- The system’s functionality meets my needs.

- The system’s features meet my needs.

- The system’s functions meet my needs.