Happy new year from all of us at MeasuringU!

In 2019 we posted 46 new articles and added significant new features to our UX testing platform, MUIQ, including think-aloud videos with picture-in-picture and an advanced UX metrics dashboard.

We hosted our seventh UX Measurement Bootcamp, and MeasuringU Press published Jim Lewis’s book, Using the PSSUQ and CSUQ in User Experience Research and Practice.

The topics we wrote about in 2019 include UX metrics, methods, the Net Promoter Score, and UX Industry Benchmarks. Here’s a summary of those articles with some takeaways from each.

UX Methods and Measurement

The field of user experience continues to mature, and its methods are evolving with it. Many research questions remain open, and we explored several of them.

- What Is the Purpose of UX Measurement?: Don’t expect UX metrics alone to tell you what to fix: they reflect the outcome of a bad experience, not its cause. Rather than relying on measures to tell you what to do, make changes and see whether the measures move.

- Where Do UX Research Methods Come From?: While the field is relatively new, the roots of UX methods date back a century and are influenced by psychoanalysis (think-aloud testing), standardized measurement (SUS and SUPR-Q), and methods for testing cognitive impairment (card sorting).

- Should You Love the HEART Framework?: The HEART framework (Happiness, Engagement, Adoption, Retention, and Task Success) extends the thinking of the Technology Acceptance Model (TAM), which is itself an extension of the Theory of Reasoned Action, and uses many common UX metrics.

- Understanding Expert Reviews and Inspection Methods: There’s more than one kind of expert review. Heuristic evaluations, guideline reviews, cognitive walkthroughs, and pluralistic walkthroughs are a few of the varieties of expert review and inspection methods.

- How the PURE Method Builds on 100 Years of Human Factors Research: The Practical Usability Rating by Experts (PURE) method shares and extends the methods of the Keystroke-Level Model (KLM), cognitive walkthroughs, and heuristic evaluations.

- How to Assess the Quality of a Measure: We created a list of fifteen psychometric (e.g., validity, reliability, efficiency) and practical (e.g., length, scoring complexity) criteria for assessing the quality of a questionnaire or measure.

- The Importance of Replicating Research Findings: We attempted to replicate nine claims or methods. For some we found similar results (e.g., promoters do recommend more); others we failed to replicate (e.g., the CE11 is not “superior” to the NPS in diagnosing problems).

- Sample Size in Usability Studies: How Well Does the Math Match Reality?: Our review of seven usability problem data sets with relatively large sample sizes found that, as predicted mathematically, the first five users uncovered the vast majority (91%) of the most common issues (but fewer of the less common issues).
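The mathematical prediction referred to above is, we assume, the standard binomial model of problem discovery: a problem that affects a proportion p of users has a probability of 1 - (1 - p)^n of being seen at least once by n users. The minimal Python sketch below computes that probability; the function name and the example values of p are hypothetical and are not figures from the study.

```python
def discovery_probability(p: float, n: int) -> float:
    """Probability that a problem affecting proportion p of users is observed
    at least once in a sample of n users (binomial model, assumed here)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n < 0:
        raise ValueError("n must be non-negative")
    # 1 minus the chance that none of the n users encounters the problem
    return 1.0 - (1.0 - p) ** n


if __name__ == "__main__":
    # Hypothetical occurrence rates, for illustration only
    for p in (0.10, 0.31, 0.50):
        print(f"p = {p:.2f}: five users -> {discovery_probability(p, 5):.0%}")
```

For a problem that affects half of users, five users give about a 97% chance of seeing it at least once; for one that affects only 10% of users, the chance drops to about 41%, which is why less common issues are more often missed with small samples.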

Attitudes, Motivations, and Variables

The user experience is both actions and attitudes. Measuring attitudes helps predict (and explain) behavior.

- Why You Should Measure UX Attitudes: Attitudes can be thought of as having three parts: what people believe (cognitive), how they feel (affective), and what they intend to do (conative/behavioral).

- Do Attitudes Predict Behavior?: Attitude is a compound construct composed of people’s thoughts and feelings, which create intentions that then affect behavior (but attitudes are not perfect predictors).

- Linking UX Attitudes to Future Website Purchases: We examined the data from a two-year longitudinal exploratory study and found that SUPR-Q extremes may predict the number of purchases and purchase rates.

- Does a Better User Experience Lead to More Purchasing?: The results of our longitudinal study showed that participants who have a poor experience on a website are less likely to purchase from it again, purchase fewer times, and spend less than those with the best experiences.

- How Accurate Is Self-Reported Purchase Data?: Our longitudinal study found that self-reported purchase rates were accurate (compared to uploaded receipts), but the reported amount spent was less accurate. We also found that better reported experiences preceded more reported transactions.

- Why Do People Call Customer Service?: Most of the companies people reported calling were telecom conglomerates, retailers, banks, and insurance companies. Reasons differed by company type, but login issues were a common reason.

- Understanding Variables in UX Research: Dependent variables are what you get, independent variables are what you set, and extraneous variables are what you can’t forget (to account for).

- Picking the Right Dependent Variables for UX Research: Start with metrics that address research questions and align with business needs, then look for existing measures that are psychometrically valid and meaningful to the customer and business. It takes practice.

- What Is a Strong Correlation?: A review of several published correlations across many fields found that even numerically “small” correlations are both valid and meaningful when the contexts of impact (e.g., health consequences) and the effort and cost of measuring are accounted for.

Net Promoter Score

The Net Promoter Score may be overhyped, but it’s wrong to dismiss or ignore this metric. We’ve conducted some of the most comprehensive research on the Net Promoter Score to help you weed through all the noise on social media.

- Do Promoters Actually Recommend More?: We conducted a longitudinal analysis and found that while at most around half of customers recommend, most recommendations do come from promoters. Promoters are between 2 and 16 times more likely to recommend than detractors.

- Effects of Labeling the Neutral Response in the NPS: Labeling the neutral point appears to attract a small percentage of responses that would otherwise fall in the 0 to 4 “detractor” range, an effect we observed only among non-customers.

- How Does Did Recommend Differ from Likely to Recommend?: Future intent may be a better predictor than past behavior, but asking both is likely the best predictor.

- Comparing the Predictive Ability of the Net Promoter Score and Satisfaction in 12 Industries: In our comprehensive analysis of dozens of companies, we found that the NPS was nominally a slightly better predictor of growth than the American Customer Satisfaction Index (ACSI).

- Will You Recommend or Would You Recommend?: This subtle change to the wording of the likelihood-to-recommend item had a negligible effect on scores.

- Has the Net Promoter Score Been Discredited in the Academic Literature?: Our meticulous review of 22 academic papers qualified some of the loftier claims made of the NPS, but the score hasn’t been discredited. Even the strongest academic critics of the NPS think you should ask about recommend intentions. Just don’t make the NPS the ONLY thing you ask.

- Assessing the Predictive Ability of the Net Promoter Score in 14 Industries: Our comprehensive analysis found a modest correlation (r = .31 to r = .35) between Net Promoter Scores and growth in 11 of 14 industries for the immediate two-year and four-year future periods.
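Several of the articles above compare Net Promoter Scores across wordings, labels, and industries. For reference, the standard calculation treats 9s and 10s on the 0 to 10 likelihood-to-recommend item as promoters and 0 through 6 as detractors, and subtracts the percentage of detractors from the percentage of promoters. The Python sketch below is a minimal illustration; the function name and sample responses are hypothetical.

```python
from collections.abc import Iterable


def net_promoter_score(ratings: Iterable[int]) -> float:
    """Return the NPS (-100 to 100) for 0-10 likelihood-to-recommend ratings."""
    ratings = list(ratings)
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r < 0 or r > 10 for r in ratings):
        raise ValueError("ratings must be on the 0-10 scale")
    promoters = sum(1 for r in ratings if r >= 9)   # 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # 0 through 6
    # Passives (7 and 8) count toward the total but toward neither group.
    return 100.0 * (promoters - detractors) / len(ratings)


if __name__ == "__main__":
    sample = [10, 9, 9, 8, 7, 7, 6, 5, 3, 10]  # hypothetical responses
    print(net_promoter_score(sample))  # 4 promoters, 3 detractors -> 10.0
```

Because passives move neither group, a shift of a few responses across the 6/7 or 8/9 boundaries can change the score noticeably, which is one reason small wording and labeling effects are worth measuring.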

Metrics

- 10 Things to Know About the PSSUQ: The Post-Study System Usability Questionnaire (PSSUQ) is the second-most-used questionnaire, after the SUS. Jim Lewis developed it in the 1980s to measure the usability of an interface; it includes 16 items and three subscales.

- 10 Things to Know about the Customer Effort Score: This single-item measure of effort has evolved over time from five points to seven. Reducing effort may lead to improved loyalty, but more validation data is needed.

- What Is Customer Delight?: There are competing models of delight, with some clear overlap. For most theories, delight can be thought of as a positive reaction to an unexpected experience.

- How Do You Measure Delight?: Our review of the literature uncovered at least ten different scales and multiple methods of collection. In most studies, multiple measures were used (often a combination of 2+ rating scales and adjective frequency lists).

- 10 Things to Know about the Microsoft NSAT Score: This four-point satisfaction measure likely correlates with the NPS, but it is better treated as a complement to the NPS than as a replacement.

- 10 Things to Know about the Technology Acceptance Model: This influential model posits that attitudes toward ease and usefulness predict whether people use (adopt) a technology.

- What Is the CE11 (And Is It Better than the NPS)?: If you’re using the NPS to uncover problems or tell you what to fix (you shouldn’t), you’ll find the CE11 (an 11-item scale) just as ineffective. Our analysis found that while the CE11 was reliable and valid, it was less able to discriminate between websites than the single likelihood-to-recommend (LTR) item.

- Validating a Lostness Measure: Lostness compares the path a user actually takes with the optimal (“happy”) path. Our study corroborated some thresholds for identifying when a user is “lost.” Lostness may work best as a secondary measure alongside other usability metrics, notably task completion and perceived ease.

- 10 Things to Know about the NASA TLX: This widely used six-item (six-subscale) measure of workload correlates with the SEQ and has some limited benchmarks, but it varies in how it’s administered and scored.
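To make the lostness comparison concrete, the sketch below uses the commonly cited lostness formula (attributed to Smith), which we assume is the measure validated above: L = sqrt((N/S - 1)^2 + (R/N - 1)^2), where N is the number of unique pages visited, S the total pages visited including revisits, and R the minimum number of pages the task requires. A score of 0 means the user followed the happy path exactly. Cutoffs of roughly 0.4 (not lost) and 0.5 (lost) are often cited; treat them as assumptions rather than the exact thresholds examined in the article. Function and parameter names are hypothetical.

```python
import math


def lostness(unique_pages: int, total_pages: int, min_pages: int) -> float:
    """Lostness L = sqrt((N/S - 1)^2 + (R/N - 1)^2) (assumed Smith formula).

    N = unique pages visited, S = total pages visited (including revisits),
    R = minimum number of pages needed to complete the task (the happy path).
    """
    n, s, r = unique_pages, total_pages, min_pages
    if n <= 0 or r <= 0 or s < n:
        raise ValueError("expected positive counts with total_pages >= unique_pages")
    # First term penalizes revisits; second penalizes detours to extra pages.
    return math.sqrt((n / s - 1) ** 2 + (r / n - 1) ** 2)


if __name__ == "__main__":
    print(lostness(4, 4, 4))            # followed the happy path exactly: 0.0
    print(round(lostness(6, 8, 4), 3))  # hypothetical wandering user: 5/12, about 0.417
```

The two terms capture different kinds of wandering: revisiting pages (S larger than N) and visiting pages off the happy path (N larger than R), so a user can score as somewhat lost through either behavior.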

Rating Scales and Surveys

Surveys (and their ubiquitous rating scales) remain an essential method for measuring the user experience.

- Do Survey Grids Affect Responses?: An analysis of the literature, along with our own studies comparing questions displayed in a grid with questions displayed on separate pages, found that presenting items in a grid slightly lowers scores (by 2%–3%).

- Very vs. Extremely Satisfied: Varying the top-box response between “very” and “extremely” had a negligible impact on scores.

- Is a Three-Point Scale Good Enough?: A review of the published literature shows overwhelmingly that three-point scales stifle respondents and tend to be less reliable and valid.

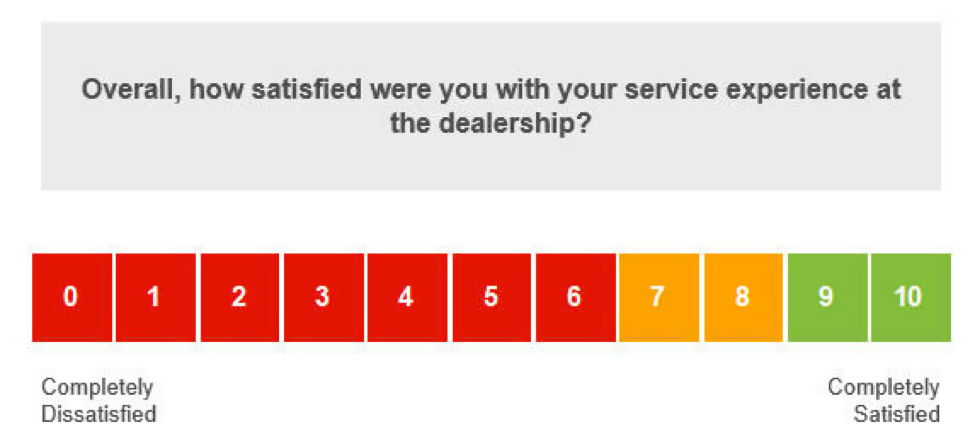

- Does Coloring Response Categories Affect Responses?: Surprisingly, adding color to 11-point satisfaction and intent-to-recommend scales had only a small (1%–3%) effect on scores.

- Five Scales to Measure Customer Satisfaction: There isn’t a single way to measure customer satisfaction. There are three broad ways to measure it: satisfaction scales, performance scales, and disconfirmation scales.

- Can You Use a 3-Point Instead of an 11-Point Scale for the NPS?: Net Promoter Scores derived from three-point scales differ substantially from those derived from eleven-point scales because of the loss of information (especially the extreme responses, which are most predictive of behavior).

- What Motivates People to Take Free Surveys?: Across two studies we found that interest, effecting change, and intrinsic motivation are the key motivators for why people take surveys.

UX Industry Reports

We conducted six mixed-methods benchmark studies using the SUPR-Q and Net Promoter Scores. We also tried out our newest feature on MUIQ, think-aloud video with picture-in-picture. Thanks to all of you who have purchased our reports. The proceeds from the sales of these reports fund the original research we post on MeasuringU.

- The UX of Pet Websites: Our retrospective benchmark and think-aloud analysis found that Chewy.com leads and PetMeds lags in UX scores.

- The UX of Outdoor Retail Websites: For people buying gear, the web-to-store experience is a differentiator (if done well).

- The UX of Online Job Searching Websites & Apps: Most job searches happen online, and we found that misleading job postings and spam degrade some website experiences.

- The UX of Dating Websites & Apps: Dating is hard; the user experience is harder on many dating websites (especially when users are concerned about scams).

- The UX of Automotive Websites: These websites have a good UX, but the emphasis on used car sales makes it difficult to find information about new cars.

- The UX of Airline Websites: On these highly used websites, filters are a challenge and an opportunity, the seat selection process can be confusing, and people resist paying for non-premium seats.

We’ll see you in 2020! We have plenty of new articles planned and two bootcamps, including our annual event in Denver.