It’s been 25 years since the development of the Heuristic Evaluation—one of the most influential usability evaluation methods.

It’s cited as one of the most-used methods by practitioners.

Yet its co-creator, Rolf Molich, recently said it was “99% bad.”

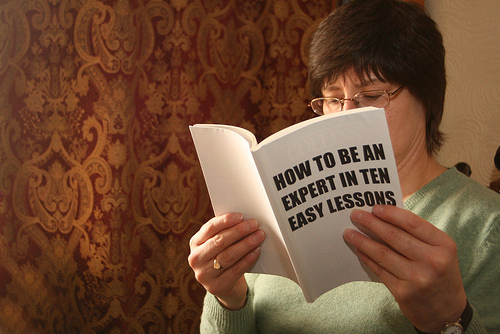

To understand what would drive such a comment, you need to understand the history and purpose of the Heuristic Evaluation and the broader technique called an expert review.

Even a minimally experienced researcher can review an interface with a set of tasks and identify many potential pitfalls. Such a technique has come to be known as an expert review.

There’s some debate[pdf] about whether a Heuristic Evaluation is more effective than an expert review. What is clear is that the name Heuristic Evaluation, and what it implies, sounds better than “expert review,” which suggests someone’s fickle, subjective opinion rather than a “science” of evaluation.

How It Was Developed

Usability testing has long been the go-to method for uncovering problems in an interface, from early-stage prototypes to completed products.

But you don’t need to wait for users to uncover problems with your product. You can use many tools and methods to uncover and correct problems before any users encounter them (in testing or after release).

One of the first such methods was the use of principle-based guidelines. Interface guidelines have been in place since before Windows 3.1. Developers and UX researchers can use these guidelines to make design decisions, or to judge the quality of a finished interface based on reasoned guidelines rather than opinions.

One problem with guidelines is that they tend to get huge. One of the more influential sets, by Smith & Mosier, contains almost 1,000 guidelines! But even these bloated documents can’t cover every interface nuance. Instead of trying to address every situation in a lengthy document, an alternative approach is to provide high-level guiding principles based on research.

Developers can guide their design decisions with these principles, and researchers can evaluate an interface for its compliance with them. Rolf Molich and Jakob Nielsen created just such a method, called the Heuristic Evaluation, in 1990. In this context, a heuristic is a principle or rule that leads to better usability, such as “Minimize what a user has to memorize.” (Don’t confuse the Heuristic Evaluation with the use of heuristics in the psychology literature, where a heuristic is simply a mental shortcut.)

Molich and Nielsen[pdf] derived their original set of heuristics by categorizing reported usability problems and drawing on their own experience with good and bad designs. The list has been modified over the last 25 years, resulting in Nielsen’s widely cited 10 heuristics. These are short, high-level guidelines that can be applied to any interface.

Other researchers have developed their own heuristics (for example, Weinschenk and Barker), but these ten are the de facto standard. If researchers say Heuristic Evaluation, it’s assumed they’re using Nielsen and Molich’s 10 heuristics unless they state otherwise.

How It Works

In a Heuristic Evaluation, evaluators work independently to inspect an interface against the heuristics. Over the years, research has shown that more evaluators uncover more problems, and that evaluators ideally know something about both usability AND the product domain they’re evaluating.

The shorter and higher-level heuristics that Nielsen and Molich developed are a double-edged sword. The much shorter principles mean evaluators can get through them relatively quickly. The disadvantage is that they are written at a high level that requires more interpretation and judgment. And where there’s judgment, there’s disagreement.

For example, one heuristic is the “Match between system and the real world.” The instructions are:

The system should speak the users’ language, with words, phrases, and concepts familiar to the user, rather than system-oriented terms. Follow real-world conventions, making information appear in a natural and logical order.

Well, who decides what the users’ language is, and what order is natural and logical? Reasonable evaluators and developers can disagree on how that applies to things like menus, labels, and function names. This helps explain why a number of studies have found that different evaluators working independently tend to uncover different usability issues. Still, the heuristics themselves should help reduce disagreement by focusing the evaluation on the rules rather than on opinions.

The Judge Writing the Law

The Heuristic Evaluation is in many respects like the U.S. Constitution, which is often described as the oldest and shortest written national constitution still in use. Many constitutions are a long laundry list of rights that are rewritten frequently; they’re more like guideline lists. The U.S. Constitution, especially the Bill of Rights, is broadly written and reads much like Nielsen’s 10 heuristics. But it requires interpretation. For example, the First Amendment guarantees freedom of speech:

Congress shall make no law respecting an establishment of religion, or prohibiting the free exercise thereof; or abridging the freedom of speech, or of the press; or the right of the people peaceably to assemble, and to petition the Government for a redress of grievances.

But what counts as speech? A movie? A photograph? What about hurtful words? Reasonable people can disagree on the interpretation. But the law as written in the Constitution, like a heuristic, keeps interpretation from swinging with the wildly differing opinions of the day. Judges independently interpret the law as written and don’t rewrite it, even if they disagree with it. (By the way, the day of this post also happens to be the 224th anniversary of the ratification of the Bill of Rights. Coincidence?)

When an evaluator identifies an issue in a Heuristic Evaluation, it should be based on non-compliance with one of these heuristics. And this becomes the crux of the problem: what happens if you deviate from the heuristics by identifying problems you think should be fixed?

To Rolf, it’s like a judge writing the law. I recently interviewed Rolf on this and other topics, and he said:

To conduct a Heuristic Evaluation, you need to put on blinders and view the world through the 10 heuristics. You reject anything that does not match the 10 heuristics. If you do anything but that, if you stumble across a usability problem, a huge, huge usability problem, that does not match any of the heuristics and as an orthodox Heuristic Evaluation, you must not report it.

If you’re doing anything else that 99% of the usability professionals are doing, you’re doing a review or inspection without the word heuristic in it. Then it’s fine. Then you can use your knowledge. Then you can use your experience… but please don’t call it a Heuristic Evaluation.

I asked Rolf whether his concern was just the term being misappropriated. He clarified that it’s a little of that, but more that practitioners are making their expert reviews sound more authoritative than they may actually be.

They use heuristics as an excuse for reporting whatever they want to and that’s where I get a little bit afraid.

The idea is that you are using—when you are using the Heuristic Evaluation method —you rely on proven heuristics, which supposedly gives your evaluation results a higher quality than if they were just made up of by some would-be expert or would-be experienced professional.

But if you use it, if your usability evaluation is just a Heuristic Evaluation by name and not by value then we might have a problem and that’s also why I’m very much against people who used Heuristic Evaluation but invent the heuristics themselves. It’s just like a judge writing the law.

Summary

It’s been 25 years since Molich and Nielsen developed the Heuristic Evaluation method. Strictly speaking, in a Heuristic Evaluation, an evaluator identifies only those problems that can be seen through the heuristics (that is, the rules).

There’s nothing wrong with inspecting an interface without heuristics; just don’t call it a Heuristic Evaluation. Call it an expert review. Rolf isn’t suggesting that you leave out usability issues that fall outside the heuristics, but that you make clear they reflect your own judgment, so readers of the report can evaluate them separately.

Rolf sees the method as valuable, but 99% of what he sees is an expert review being called a Heuristic Evaluation (which is why he wryly called it “99% bad,” an inside joke directed at his co-author). While it may sound better to call your expert review a Heuristic Evaluation, that title implies a more narrowly defined method, and using it loosely is like a judge writing the law.