The first questionnaires appeared in the mid–18th century (e.g., the “Milles” questionnaire). Scientific surveys have been around for almost a hundred years.

Consequently, there are many sources of advice on how to make surveys better. The heart of each survey is the questions asked of respondents. Writing good survey questions involves many of the principles of good writing, in addition to survey-specific guidelines and advice.

We’ve reviewed recommendations, research, and guidelines for surveys and questionnaire design from several sources and reconciled what we learned with our own research and experience conducting surveys (see sources at the bottom of the article).

Many recommendations and guidelines come from social science and political opinion research, which often includes charged and sensitive topics, or educational research, which focuses on assessments of knowledge. These fields rarely involve industrial research surveys, so we’ve selected and modified the guidance to better fit the domain of the UX or CX researcher.

One of the most important objectives in survey writing is to communicate clearly with respondents. Here are seven recommendations to improve the clarity of your survey questions.

1. Keep questions short.

Almost all the sources we reviewed recommend brief questions. Long questions take longer to process, which increases completion time. We (and others) have found that survey completion time is a strong predictor of dropout, so researchers have good reason to ask as few survey questions as possible and to keep those questions short. But how short is short enough, and when does a question become too long?

The literature includes some suggested question lengths. For example, Johnson and Morgan (2016) suggest keeping questions under 25 words, and one of the earliest recommendations (Payne, 1951) suggests questions be fewer than 20 words. Instead of word count, Peterson (2000) recommends using no more than three commas.

There may be an exception to the shorter-is-better rule when respondents need to recall information that isn’t easy to remember. Some evidence, from questionnaires administered in person, suggests that redundancy (and therefore longer questions) can help with recall.

While we’re not sure how accurate a guideline about the number of words or commas can be at identifying brevity (a topic for future research), we do think it’s a good idea to keep questions short by removing superfluous words and connecting phrases. It makes sense to examine questions with more than 25 words to ensure that all the words are necessary.
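To make the thresholds above concrete, here is a minimal Python sketch that flags questions exceeding 25 words or three commas. The function name `length_flags` and the tokenizing regex are illustrative assumptions, not a published tool; as noted above, these counts are rough screens for review, not a definitive measure of brevity.

```python
import re

# Thresholds from the sources cited above; treat them as review flags, not rules.
MAX_WORDS = 25   # Johnson and Morgan (2016); Payne (1951) suggested fewer than 20
MAX_COMMAS = 3   # Peterson (2000)

def length_flags(question: str) -> list[str]:
    """Return reasons a question might deserve a second look for brevity."""
    # Count word tokens; contractions and hyphenated words count as one word.
    words = re.findall(r"[A-Za-z0-9]+(?:['’-][A-Za-z0-9]+)*", question)
    flags = []
    if len(words) > MAX_WORDS:
        flags.append(f"{len(words)} words (more than {MAX_WORDS})")
    commas = question.count(",")
    if commas > MAX_COMMAS:
        flags.append(f"{commas} commas (more than {MAX_COMMAS})")
    return flags

print(length_flags("How satisfied are you with the checkout process?"))  # []
```

A flagged question isn’t necessarily too long; the flag only marks it for a closer read to remove superfluous words.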

One way to keep questions short, regardless of the word count, is to remove unnecessary words. Some of the usual culprits suggested by Dillman et al. (2014) include:

- Due to the fact that: Replace with Because or That.

- At this point in time: Replace with Now.

- If conditions are such that: Replace with If.

- Take into consideration: Replace with Consider.
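The substitutions above are mechanical enough to sketch in code. This hypothetical `tighten` function applies the four Dillman et al. (2014) replacements, always using “because” for “due to the fact that” (“that” sometimes fits better, so review the output rather than applying it blindly).

```python
import re

# Wordy phrases and shorter replacements, following the examples above.
REPLACEMENTS = {
    "due to the fact that": "because",
    "at this point in time": "now",
    "if conditions are such that": "if",
    "take into consideration": "consider",
}

def tighten(question: str) -> str:
    """Replace known wordy phrases (case-insensitive), keeping an initial capital."""
    for wordy, short in REPLACEMENTS.items():
        pattern = re.compile(r"\b" + re.escape(wordy) + r"\b", re.IGNORECASE)

        def repl(match: re.Match) -> str:
            # Capitalize the replacement if the original phrase was capitalized.
            return short.capitalize() if match.group(0)[0].isupper() else short

        question = pattern.sub(repl, question)
    return question

print(tighten("At this point in time, how often do you use the app?"))
# Now, how often do you use the app?
```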

When it comes to question writing, strive for Hemingway over Proust.

2. Use simple language.

While short is good, short and simple is better. If a simple word will do, use it over a multisyllabic, complex word. This principle applies to both question stems and response options.

After all, reducing the number of words is only one way to shorten a sentence. Another is to reduce the number of syllables. The average time required to speak a syllable is about 200 ms, so a five-syllable word takes roughly five times as long to say as a one-syllable word. Multisyllabic words are also difficult to read because they’re generally less familiar and harder to decode. Standard word processors don’t report syllable counts, but some online tools do.

Our recent research on the UMUX-Lite provides a good example of the benefits of using simpler words. The original wording of the usefulness item was “The system’s capabilities meet my requirements” (13 syllables). We found the simpler “The system’s features meet my needs” (8 syllables) had nearly identical means and frequency distributions, demonstrating that we could obtain equivalent measurement with a shorter, simpler question.
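A rough way to estimate syllable counts and speaking time is to count vowel groups. The sketch below uses a common heuristic, not a linguistic syllabifier; its rules (vowel groups plus a silent final “e”) are assumptions that will sometimes be off by a syllable, and the 200 ms figure is the average cited above.

```python
import re

MS_PER_SYLLABLE = 200  # approximate average time to speak one syllable (see above)

def count_syllables(word: str) -> int:
    """Heuristic: count vowel groups, discounting a silent final 'e'.
    English spelling is irregular, so expect occasional off-by-one errors."""
    word = word.lower().strip(".,;:!?\"'’")
    count = len(re.findall(r"[aeiouy]+", word))
    # A final 'e' is usually silent ("use", "cumbersome"), but not in "-le" ("able").
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)

def speaking_time_ms(text: str) -> int:
    """Estimated time to say the text aloud, based on the syllable heuristic."""
    return MS_PER_SYLLABLE * sum(count_syllables(w) for w in text.split())

for word in ["cumbersome", "awkward", "utilize", "use"]:
    print(word, count_syllables(word))
```

At 200 ms per syllable, the hand counts in the UMUX-Lite example work out to roughly 2.6 seconds (13 syllables) versus 1.6 seconds (8 syllables) to say each item aloud.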

Several sources recommend assuming a middle school (6th to 7th grade) reading level. Doing so will also help respondents who are answering in a language other than their primary language.
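The sources don’t prescribe a particular readability measure. One widely used option is the Flesch-Kincaid Grade Level, which combines average sentence length and average syllables per word; a score of about 6 to 7 corresponds to the middle-school target. The sketch below assumes you already have word, sentence, and syllable counts (for example, from a syllable heuristic or an online tool).

```python
def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """Flesch-Kincaid Grade Level from raw counts of words, sentences, and syllables."""
    if words <= 0 or sentences <= 0:
        raise ValueError("need at least one word and one sentence")
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59

print(round(flesch_kincaid_grade(12, 1, 16), 1))  # 4.8
print(round(flesch_kincaid_grade(25, 1, 45), 1))  # 15.4
```

For example, a single 12-word question with 16 syllables scores about 4.8, while a 25-word question with 45 syllables scores about 15.4, well above the recommended level.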

3. Prefer high- over low-frequency words.

A more specific approach to writing simple questions and response options is to use common words. Words that occur frequently in a language are more familiar and tend to require less mental processing. Conversely, less common words take longer to process and are less likely to be understood. Examples of infrequently used words and their common alternatives are:

- Utilize vs. use

- Discrepancies vs. differences

- Affluent vs. wealthy

- Irate vs. angry

- Be cognizant of vs. know

- Rectify vs. correct

- Top priority vs. most important
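A simple screen can catch the specific words in this list. The `flag_uncommon` function below is a hypothetical sketch that only knows the pairs shown above and matches exact forms (it won’t catch “utilizes”), so treat it as a starting point for your own list rather than a frequency estimate.

```python
import re

# Less common words and common alternatives, from the list above.
PLAIN_ALTERNATIVES = {
    "utilize": "use",
    "discrepancies": "differences",
    "affluent": "wealthy",
    "irate": "angry",
    "be cognizant of": "know",
    "rectify": "correct",
    "top priority": "most important",
}

def flag_uncommon(question: str) -> list[tuple[str, str]]:
    """Return (found phrase, suggested alternative) pairs in list order."""
    found = []
    for phrase, plain in PLAIN_ALTERNATIVES.items():
        # Whole-word, case-insensitive match of the exact phrase.
        if re.search(r"\b" + re.escape(phrase) + r"\b", question, re.IGNORECASE):
            found.append((phrase, plain))
    return found

print(flag_uncommon("Which discrepancies did you rectify?"))
# [('discrepancies', 'differences'), ('rectify', 'correct')]
```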

While you might not know the actual frequency of a word, a little testing may reveal words that are troublesome to respondents. For example, Item 8 in the System Usability Scale (SUS) has evolved from “I found the system very cumbersome to use” to “I found the system very awkward to use” after research found some participants struggled with “cumbersome.”

The traditional approach in linguistics to estimate word frequency has been to create and analyze a corpus of text. Few of these formal corpora are available without cost to researchers, but you can use search engines to estimate relative word frequency.

For example, on January 15, 2021, a Google search of “cumbersome” had 28,000,000 results, while “awkward” had 169,000,000 results (about six times as many). “Utilize” had 627,000,000 results, and “use” had 15,520,000,000 (about 25 times as many).
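The ratios above are simple divisions of the reported counts. This sketch recomputes them; the counts are the January 2021 figures from the text, and because search engines report only rough estimates, only the approximate size of each ratio is meaningful.

```python
# Google result counts reported above (January 15, 2021).
hits = {
    "cumbersome": 28_000_000,
    "awkward": 169_000_000,
    "utilize": 627_000_000,
    "use": 15_520_000_000,
}

def relative_frequency(common: str, rare: str) -> float:
    """How many times more results the common word returned than the rare one."""
    return hits[common] / hits[rare]

for common, rare in [("awkward", "cumbersome"), ("use", "utilize")]:
    print(f"{common} vs. {rare}: {relative_frequency(common, rare):.1f}x")
```

Running it prints ratios of about 6.0 and 24.8.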

4. Use the respondents’ vocabulary.

UX researchers often survey specific populations rather than general consumers. For example, in research MeasuringU has conducted over the past few months, we have focused on teachers, students, daily users of online streaming, meeting and event planners, IT decision-makers, gamers, automotive fleet managers, and UXPA members.

In general, you’ll want to avoid jargon (e.g., value proposition, key driver, delighter) that may be familiar to you as a researcher but not to the respondent. However, if your target respondents are familiar with certain jargon or terms, then you’ll want to match their vocabulary. This is an extension of Nielsen Heuristic #2, which is to “speak the users’ language.”

Avoid jargon and technical language unless participants understand it and it makes questions clearer. If the survey is for IT decision-makers, you can likely use terms such as “identity management” and “infosec.” Gamers seem to have their own language, so using terms such as afk, lul, gg, and sus may be warranted in some situations. UX researchers will, of course, interpret SUS differently from gamers.

5. Minimize the use of acronyms.

Is an MVP a Minimum Viable Product or the Most Valuable Player? Although acronyms shorten questions, there is always a chance that some respondents won’t know what an acronym stands for.

This guideline has exceptions, of course. Some acronyms are used so frequently by a set of respondents that they have become acceptable. Some (not many) acronyms are so universally understood that you never need to spell them out: US, NATO, SCUBA, RADAR. In general, however, if you find yourself using an acronym, consider spelling it out to improve clarity.
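If you want to catch acronyms before fielding a survey, a small check can list all-capital tokens that aren’t on an allowlist. In this hypothetical sketch, the allowlist holds only the four examples above; you would add acronyms your specific respondents know.

```python
import re

# Acronyms treated above as universally understood; extend per audience (assumption).
WELL_KNOWN = frozenset({"US", "NATO", "SCUBA", "RADAR"})

def unexplained_acronyms(question: str, allow: frozenset[str] = WELL_KNOWN) -> list[str]:
    """Return all-caps tokens (2+ letters) not in the allowlist, without duplicates."""
    found: list[str] = []
    for token in re.findall(r"\b[A-Z]{2,}\b", question):
        if token not in allow and token not in found:
            found.append(token)
    return found

print(unexplained_acronyms("How important is the MVP to US customers?"))  # ['MVP']
print(unexplained_acronyms("Rate our IAM tools.", allow=WELL_KNOWN | {"IAM"}))  # []
```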

6. Avoid complicated syntax.

Some surveys try to pack a lot into one question to reduce the number of questions, or use uncommon syntax to reduce question length. Try to avoid overly complex questions with complicated grammatical structures.

For example, Tourangeau et al. (2000) warn against what they call adjunct wh-questions (starting with who, what, when, or where) and constructions with embedded clauses.

Adjunct wh-questions

The following wh-question, while grammatically correct and having fewer than 20 words, is hard to process:

When did you tell your account representative your software license needed to be renewed?

Here’s a syntactically simpler version of nearly the same length:

When did you tell your account representative that you needed to renew your software license?

The first version is complicated for at least two reasons. First, it has characteristics of a garden path sentence: “your software license” at first appears to be the direct object of the verb “tell,” but it’s actually the subject of a subordinate clause whose verb is “needed.” Second, that subordinate clause is in passive voice (discussed below): the receiver of the action (“license”) appears before the verb that acts on it (“renewed”), which requires additional cognitive processing to understand.

Embedded clauses

Here are two versions of an item for an agreement (Likert) scale, with and without an embedded clause.

The task that I just completed in this study was easy.

This task was easy.

In the version with the embedded clause, seven words are between the subject and the verb. In the alternate wording, the verb immediately follows the subject (shorter and simpler).

Left-embedded syntax

Similarly, Lenzner et al. (2010) recommend avoiding left-embedded syntactic structures. These occur when respondents have to read through many adjectives, adverbs, and/or prepositional phrases before getting to the critical part of a question. Consider the following two agreement items.

With left-embedded syntax:

To what extent do you agree or disagree with the following statement?

Even if it would cost them some money and might make their code more complex because the “rules” are a complicated mix of industry regulations and federal, state, and local laws, Twitter, as one of the most influential social media platforms, should comply with industry guidelines.

Without left-embedded syntax:

To what extent do you agree or disagree with the following statement?

Twitter, as one of the most influential social media platforms, should comply with industry guidelines even if it would cost them some money and might make their code more complex because the “rules” are a complicated mix of industry regulations and federal, state, and local laws.

The advice to avoid left-embedded syntax is similar to the journalistic practice to avoid burying the lead (or lede): Don’t take so long to get to the point.

7. Avoid passive voice.

This is one of the best-known writing guidelines. Ever since it appeared in Strunk and White’s The Elements of Style (1959), generations of writing students have struggled to avoid passive sentences. However, passive voice is part of the language for a reason. Although writing in active voice is generally good practice, you should understand when passive voice is the better choice.

Here’s a quick review of passive voice: (1) The object of an active sentence becomes the subject of a passive sentence, and (2) some form of the verb “be” appears before the main verb. The performer of the action (the subject of the active sentence), if it appears at all, moves into a “by” prepositional phrase after the verb. For example,

- Active voice: This system met my needs.

- Passive voice with performer: My needs were met by this system.

- Passive voice without performer: My needs were met.

According to trace theory, a passive sentence requires more effort to parse because a reader or listener must process a trace that is in the passive version of the sentence, co-indexing the passive verb with its active-voice object (which was moved from its normal position following the verb).
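The two-part definition of passive voice suggests a crude automated check: a form of “be” immediately followed by a past participle. This Python sketch is an assumption-laden heuristic (it recognizes regular “-ed” participles plus a short, hypothetical list of irregular ones), not a parser, so it misses some passives and flags some adjectives.

```python
import re

# Forms of "be" that build the passive.
BE_FORMS = r"(?:am|is|are|was|were|be|been|being)"
# Regular participles end in -ed; this short irregular list is illustrative, not complete.
IRREGULAR = r"(?:met|made|done|given|taken|found|caught|seen|known|written|sent|paid)"
PASSIVE = re.compile(rf"\b{BE_FORMS}\s+(?:\w+ed|{IRREGULAR})\b", re.IGNORECASE)

def looks_passive(sentence: str) -> bool:
    """True if a form of 'be' is directly followed by a likely past participle.
    Misses passives with an intervening adverb ("was quickly met") and flags
    some adjectives ("was tired"), so treat hits as prompts for review."""
    return PASSIVE.search(sentence) is not None

print(looks_passive("This system met my needs."))          # False
print(looks_passive("My needs were met by this system."))  # True
```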

When to use active voice

Unless there is a compelling reason to do otherwise, survey writers should use active voice. Passive voice often leads to wordier, weaker writing; passive sentences rewritten as active can be as much as 40% shorter. Passive voice can also be vague: when a passive sentence omits the performer of the action, the writing can seem evasive, as if the writer is avoiding responsibility. Readers also prefer technical documentation that uses less passive voice.

When to use passive voice

Passive voice is appropriate in some situations.

- Emphasize the receiver of the action when the performer of the action is unimportant or unknown (“The ball was caught”).

- Avoid the use of personal pronouns in scientific writing, although this practice has been changing (“The expected effect was not found”).

- Avoid naming a responsible party (“Mistakes were made”).

- Politely inform customers of something they must do (“Check-in must be completed 30 minutes before the flight”).

What about survey questions?

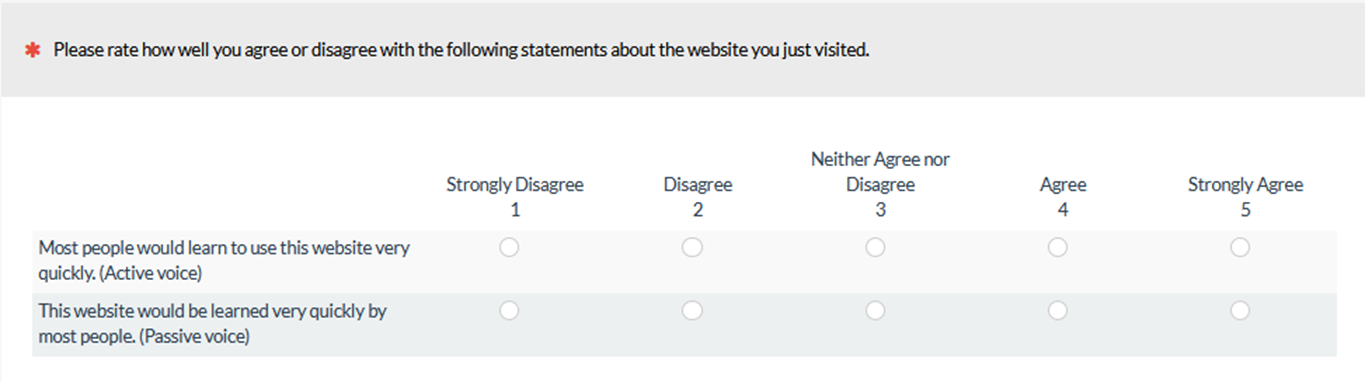

Consider the two versions of the item in Figure 1. They both have 10 words, but the version in active voice is easier to read.

Figure 1: Passive and active versions of an agreement item.

We couldn’t invent an example where passive voice was better than active voice in a survey question, probably because the situations in which passive is appropriate are rare when writing survey questions. There might be times when the focus of the question should be on the receiver rather than the performer of an action, but survey question writing is not scientific writing, and using passive voice to obscure responsibility isn’t consistent with clear writing.

When writing survey instructions (rather than questions), there may be times when passive voice could soften an otherwise harsh-sounding sentence. For example, here are active (socially direct) and passive (socially indirect) versions of the same message:

You must answer every required question to receive payment for participation.

To receive payment for participation, every required question must be answered.

Summary

To improve the clarity of your survey questions:

- Keep questions short.

- Use simple language.

- Prefer high- over low-frequency words.

- Use the respondents’ vocabulary.

- Minimize the use of acronyms.

- Avoid complicated syntax.

- Avoid passive voice.

As UX practitioners, one of our goals is to achieve ease of use for the artifacts we design and evaluate, including the surveys we design and administer. It might be hard to measure the effect of any one of these guidelines on the respondent experience, but there is likely a cumulative positive effect across a large number of survey questions.

References

The Complete Guide to Writing Questionnaires by David Harris (2014).

Internet, Phone, Mail, and Mixed-Mode Surveys by Dillman et al. (2014).

Survey Scales by Johnson and Morgan (2016).

The Survey Research Handbook by Alreck and Settle (2003).

Designing Quality Survey Questions by Robinson and Leonard (2019).

Constructing Effective Questionnaires by Robert Peterson (2000).

Questionnaire Design by Ian Brace (2018).

Cognitive Burden of Survey Questions and Response Times by Lenzner et al. (2010).