Beyond speculation and hyperbole, we’ve been exploring how ChatGPT can be used in UX research.

In two earlier articles, we conducted analyses that suggested ChatGPT may have a role, given the right research context. In the first analysis, we found that ChatGPT-4 was able to assist researchers in sorting open-ended comments.

In the second analysis, we assessed how well ChatGPT’s card sorting results matched with the results made by a group of users attempting the same card sort. We found that ChatGPT could sort items into groups and appropriately name the groups in a way comparable to the groups and names generated by UX researchers analyzing data collected from participants.

Given that ChatGPT produced reasonable card sorting results, is it also possible that it could do the opposite and provide reasonable tree testing results?

In this article, we describe an experiment conducted with ChatGPT-4 to see whether it might be a useful tool to help researchers assess an existing navigation structure by mimicking a tree test.

Tree Testing

Tree testing is a popular UX research method often used to test how well participants can navigate an existing or proposed website information architecture or a software product’s menu structure.

In a tree test, participants are asked where they would find content or features in a “tree” (which gets its name because initial tree tests were conducted using the Windows Explorer file tree).

Tree testing is often thought of as reverse card sorting because instead of creating a structure to place items into, participants specify where they expect an item to be placed in an existing structure.

Tree testing can also be thought of as a specialized usability test where only the labels and structure are being tested, so the output looks like a usability test with task-based metrics such as completion rate (called findability rate), perceived ease, and completion time. A researcher can use these metrics, averaged across participants, to understand the strengths and weaknesses of a navigation structure.

Card sorting and tree testing are similar in that they are methods for studying navigation structures, but there is an important difference. In card sorting, the key output is the grouping and naming of categories done by a researcher. Although participants are involved in providing their input on how to sort the cards, the results are ultimately based on the judgment of the researcher who assembles the structure.

In contrast, the outputs of a tree test are the task-based metrics obtained directly from participant performance. Although ChatGPT might be able to emulate human judgment from a card sort, we were more skeptical of how well it would emulate the performance of a typical participant traversing a navigation structure in a tree test. But why speculate when you can test?

Tree Testing Comparison Study

To assess ChatGPT-4’s ability to mimic participant behavior in a tree test, we first needed to identify an existing navigation structure to test.

In our card sorting analysis, we used items from the Best Buy website, which are concrete and arguably easier to categorize by both humans and ChatGPT. In this study, we wanted to use a more abstract tree structure, but one that could still be relevant to a large population. We selected the Internal Revenue Service website (IRS.gov), because what could be more abstract than the U.S. Tax Code?

Tasks

To assess the IRS navigation structure, we created the following ten tasks that asked participants to locate items in different parts of the tree structure (Table 1).

| Task | Description | Correct Path |
|---|---|---|
| 1 | Where would you go to find out if you can pay your taxes with PayPal? | Pay > PAY BY > Debit or Credit Card |
| 2 | Where would you go to find information about the form you would need if you were a worker that had Income and Social Security withheld? | Forms & Instructions > POPULAR FORMS & INSTRUCTIONS |
| 3 | Where would you go if you were expecting a tax refund and had not yet received it? | Refunds > Where’s my refund |
| 4 | Where would you go to get more information on possible consequences for not paying your taxes? | Pay > POPULAR > Penalties |
| 5 | Where would you go to find out if your electric vehicle could save you any money on your taxes? | Credits & Deductions > POPULAR > Clean Energy and Vehicle Credits |
| 6 | Where would you go to learn about how to file taxes for an LLC? | File > INFORMATION FOR… > Businesses & Self-employed |
| 7 | Where would you go to learn how to update your address? | File > FILING FOR INDIVIDUALS > Update your Information |
| 8 | Where would you go to learn about how children can reduce your taxes? | Credits & Deductions > POPULAR > Child Tax Credit |
| 9 | Where would you go to find information on how much you can contribute to your 401(k)? | Credits & Deductions > POPULAR > Retirement plans |
| 10 | Where would you go to find information on past-due child support that may impact your refund? | Refunds > Reduced Refunds |

Table 1: Tree tasks and correct paths.

Each task has a correct path. Some tasks were more readily solvable by referring to the text within the tree structure, while others required some inference. For example, Task 5 could be found by almost directly referencing the topic of an electric vehicle (Credits & Deductions > POPULAR > Clean Energy and Vehicle Credits), whereas Task 1 required some inference from the user as information about using PayPal is only mentioned on the web page itself (Pay > PAY BY > Debit or Credit Card).
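To make the idea of a "correct path" concrete, here is a minimal Python sketch of how a selected path could be scored against the correct path from Table 1. The function names and the case-insensitive matching rule are assumptions for illustration, not the scoring method used in the study, and the two example responses are made up.

```python
from __future__ import annotations


def normalize_path(path: str) -> tuple[str, ...]:
    """Split an 'A > B > C' path into trimmed, case-folded steps."""
    return tuple(" ".join(step.split()).casefold() for step in path.split(">"))


def is_correct(selected: str, correct: str) -> bool:
    """True when the selected path has the same steps as the correct path."""
    return normalize_path(selected) == normalize_path(correct)


def findability_rate(selected_paths: list[str], correct: str) -> float:
    """Share of selected paths that match the correct path."""
    if not selected_paths:
        raise ValueError("need at least one selected path")
    hits = sum(is_correct(p, correct) for p in selected_paths)
    return hits / len(selected_paths)


# Correct path for Task 3, copied from Table 1.
TASK_3_CORRECT = "Refunds > Where’s my refund"

# Hypothetical responses, invented only to exercise the functions.
example_responses = [
    "Refunds > Where’s My Refund",
    "Refunds > What to Expect",
]
print(findability_rate(example_responses, TASK_3_CORRECT))
```

Matching on case-folded labels is a deliberate choice here because the capitalization of some labels in Table 1 differs slightly from the menu text in the appendix.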

ChatGPT

We converted the IRS tree into a ChatGPT prompt and then asked ChatGPT to specify how it would navigate to find each of the ten task targets. We asked ChatGPT to take its best guess as to where the information was located (see the prompts in the appendix at the end of this article). We also asked it to use the Single Ease Question (SEQ®) to rate how difficult an average person would think it was to find each topic. We repeated this process five times with the same prompt to assess consistency, as ChatGPT responses include a degree of randomness.
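As a rough illustration of what converting a tree into a prompt can look like, the sketch below renders a nested structure as indented text. The two-space indentation, the helper names, and the choice of a nested dictionary are assumptions; the article does not say how the tree was formatted before being pasted into ChatGPT. The excerpt covers only the Pay and Refunds branches from the appendix.

```python
from typing import Iterator, Union

# A branch maps labels to sub-branches (dict) or to a list of leaf labels.
Tree = dict

# Excerpt of the menu from the appendix (Pay and Refunds branches only).
IRS_EXCERPT: Tree = {
    "Pay": {
        "PAY BY": [
            "Bank Account (Direct Pay)",
            "Debit or Credit Card",
            "Payment Plan (Installment Agreement)",
            "Electronic Federal Tax Payment System (EFTPS)",
        ],
        "POPULAR": [
            "Your Online Account",
            "Tax Withholding Estimator",
            "Estimated Taxes",
            "Penalties",
        ],
    },
    "Refunds": [
        "Where’s My Refund",
        "What to Expect",
        "Direct Deposit",
        "Reduced Refunds",
        "Amend Return",
    ],
}


def tree_lines(tree: Tree, depth: int = 0) -> Iterator[str]:
    """Yield one indented line per node, depth-first, in insertion order."""
    for label, children in tree.items():
        yield "  " * depth + label
        if isinstance(children, dict):
            yield from tree_lines(children, depth + 1)
        else:
            for leaf in children:
                yield "  " * (depth + 1) + leaf


def build_prompt(tree: Tree) -> str:
    """Prefix the rendered tree with the header used in Prompt 1."""
    return "\n".join(["MENU STRUCTURE:", *tree_lines(tree)])


print(build_prompt(IRS_EXCERPT))
```

Because the rendering is deterministic, the same prompt text can be reused for each of the repeated runs, so differences between runs come from the model and not from the input.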

One concern we had was that ChatGPT would have immediate access to the entire tree at once whereas participants had to traverse the tree, uncovering categories and nodes one at a time (see Video 1). For the tasks we selected, however, the answers weren’t obviously contained within the tree. For example, there wasn’t a node in the tree that says, “Pay with PayPal” (an obvious answer to Task 1). This means that both humans and ChatGPT would have to make inferential leaps as to where the right answers were.

Video 1: Video of participant working on the tree test task with the MUiQ® platform.

Participants

To compare ChatGPT’s results to how people would perform, we created a tree test study on our MUiQ platform. We invited 33 members of an online U.S.-based panel to participate in February 2024.

Study Results

To assess the comparability in performance, we compared success rates and paths, perceived ease (SEQ), and which task was considered the hardest.

Task Success

ChatGPT performed quite well at locating items, finding the correct path for all tasks in one iteration and nine of the ten tasks in four iterations (missing Task 9, “Where would you go to find information on how much you can contribute to your 401(k)?”).

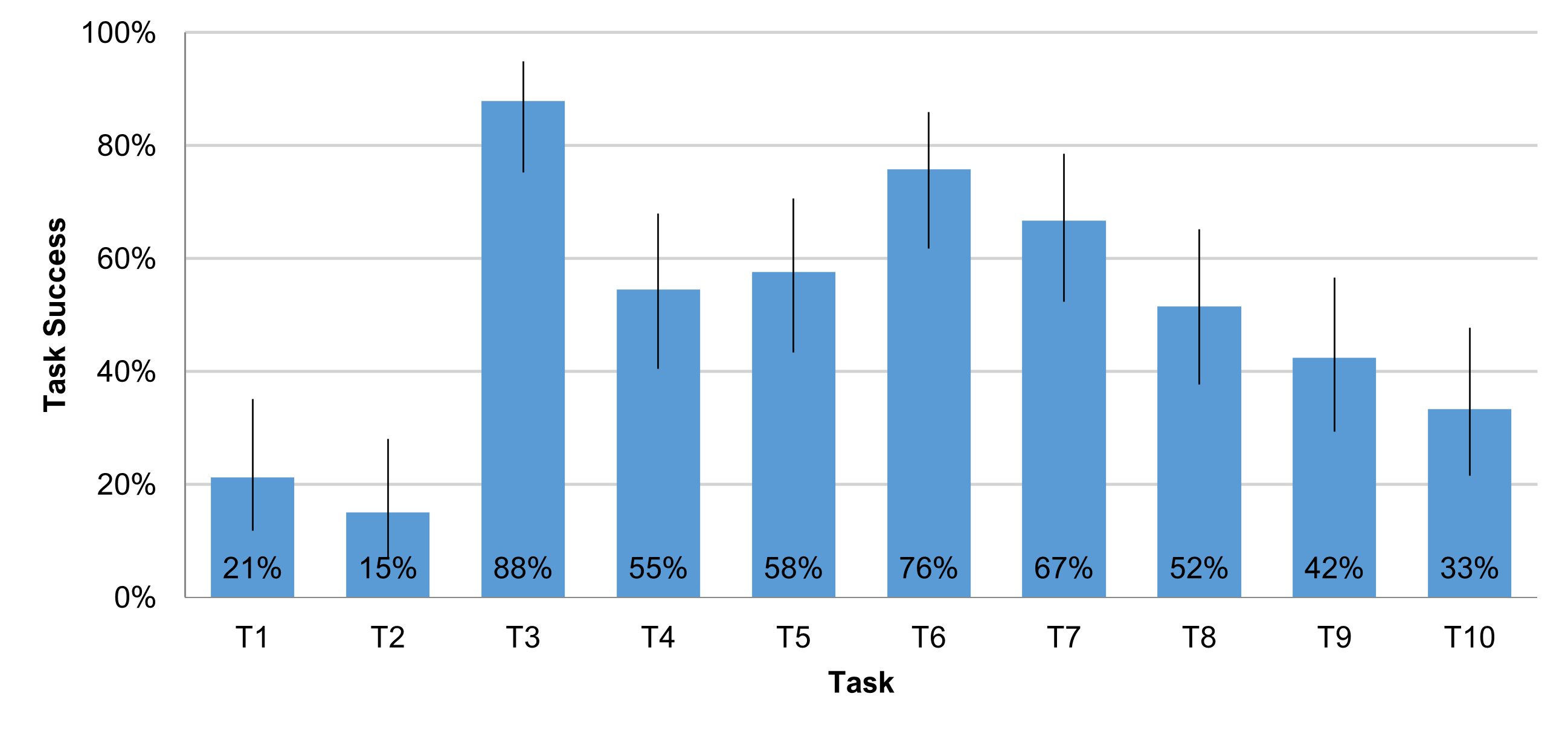

How did participants perform? Much worse—about 51% successful task completions (across the ten tasks). Figure 1 shows the successful task completion/findability rates for the 33 participants. None of the participants got all tasks correct. Half of the participants got at least 6/10 tasks correct. One participant got 9/10 correct and one got them all wrong.

Figure 1: Task completion rates for the human participants (n = 33).

Participants had the highest success rate with Task 3 (88%; “Where would you go if you were expecting a tax refund and had not yet received it?”) and the lowest with Task 2 (15%; “Where would you go to find information about the form you would need if you were a worker that had Income and Social Security withheld?”).

Task Ease

After determining that ChatGPT didn’t mimic human search performance well (it significantly outperformed participants), we next assessed how well it could “empathize” with how difficult a task would be to potential participants.

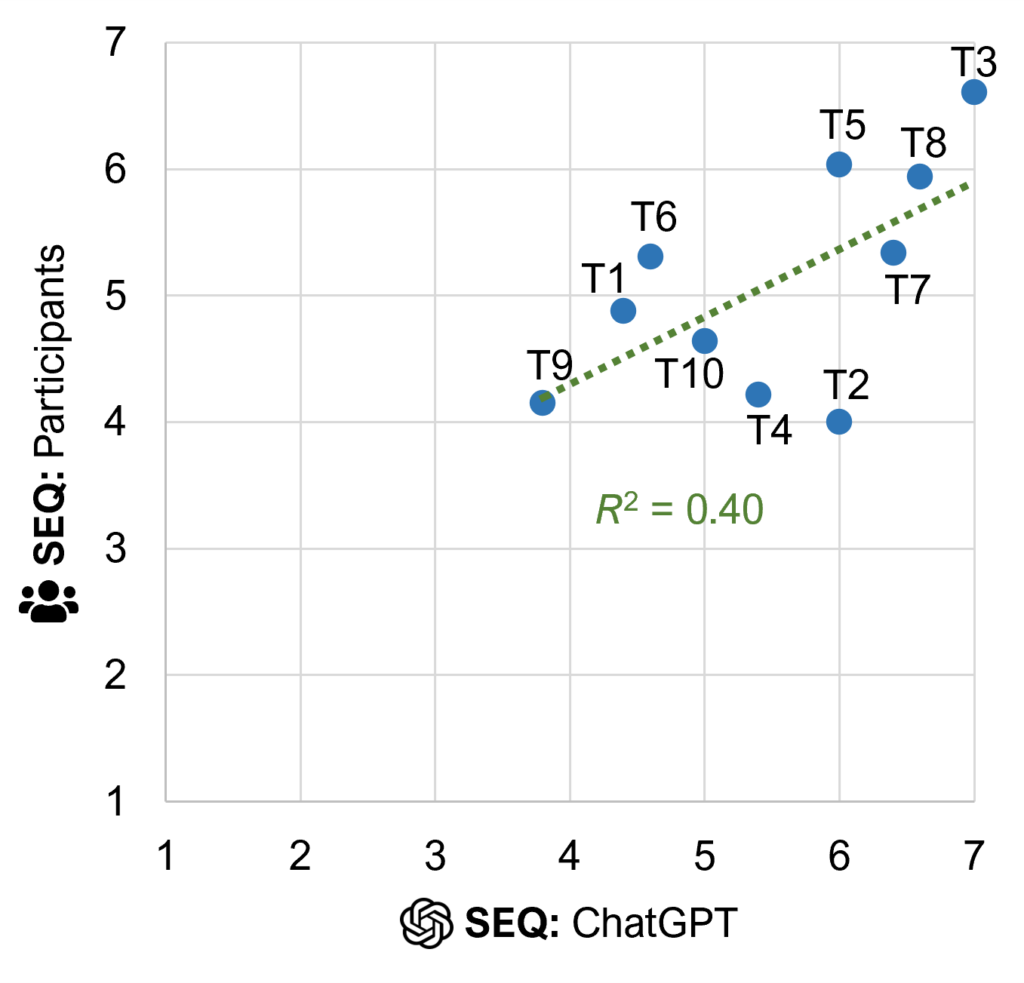

Here we actually found reasonably good predictions (Figure 2). Across the five conversations and ten tasks, ChatGPT’s average SEQ scores strongly correlated with participants’ scores (r(8) = .62, p = .06).

Figure 2: Scatterplot of SEQ means for ChatGPT iterations and participants.

Across the ten tasks, ChatGPT’s average SEQ score was 5.5, and the participants’ average was 5.1 (a difference of 0.4). The mean absolute difference across the tasks was 0.7. In other words, ChatGPT seems able to “predict” how difficult people will think a task is.
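These summary figures can be approximately reproduced from the rounded task-level means reported in Table 2. The sketch below is an illustration, not the authors' analysis script. It uses only the Python standard library, and the variable and function names are assumptions. Because the inputs are rounded to one decimal, the results agree with the reported values only after rounding.

```python
from math import sqrt

# Task-level SEQ means for Tasks 1-10, copied from Table 2.
participants = [4.9, 4.0, 6.6, 4.2, 6.0, 5.3, 5.3, 5.9, 4.2, 4.6]
chatgpt = [4.4, 6.0, 7.0, 5.4, 6.0, 4.6, 6.4, 6.6, 3.8, 5.0]


def mean(values):
    return sum(values) / len(values)


def pearson_r(xs, ys):
    """Pearson correlation computed from sums of squares and cross-products."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# Difference between the two overall means.
mean_difference = mean(chatgpt) - mean(participants)

# Mean of the absolute task-level differences.
mean_abs_difference = mean([abs(c - p) for c, p in zip(chatgpt, participants)])

# Correlation across the ten tasks, with its t statistic on n - 2 = 8 df.
r = pearson_r(participants, chatgpt)
n = len(participants)
t_stat = r * sqrt(n - 2) / sqrt(1 - r * r)

print(round(mean_difference, 1), round(mean_abs_difference, 1), round(r, 2))
```

Converting the t statistic to a p-value requires a t distribution with 8 degrees of freedom, which the standard library does not provide, so that step is left out here.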

Another way to assess the alignment of ChatGPT’s and participants’ SEQ means at the task level is to examine the results using the adjective scale for interpreting SEQ scores. This helps the interpretation by putting words to the numbers.

Table 2 shows the mean SEQ scores for the participants and ChatGPT runs matched to their calibrated adjectives. The adjectives range from “Hardest Imaginable” (scores below 2.7) to “Easiest Imaginable” (6.5 or greater on the seven-point scale). For example, on Task 1, the participants’ average score of 4.9 falls within the “Easy” category and so does the 4.4 from the ChatGPT runs. For the ten tasks, there were six direct matches (Tasks 1, 3, 5, 6, 9, and 10), three close matches (adjacent adjectives for Tasks 4, 7, and 8), and one difference (nonadjacent adjectives for Task 2).

| Task | SEQ: Participants | SEQ: ChatGPT | Abs. Difference | Adj: Participants | Adj: ChatGPT |
|---|---|---|---|---|---|
| 1 | 4.9 | 4.4 | 0.5 | Easy | Easy |
| 2 | 4.0 | 6.0 | 2.0 | Difficult | Very easy |
| 3 | 6.6 | 7.0 | 0.4 | Easiest imaginable | Easiest imaginable |
| 4 | 4.2 | 5.4 | 1.2 | Difficult | Easy |
| 5 | 6.0 | 6.0 | 0.0 | Very easy | Very easy |
| 6 | 5.3 | 4.6 | 0.7 | Easy | Easy |
| 7 | 5.3 | 6.4 | 1.1 | Easy | Very easy |
| 8 | 5.9 | 6.6 | 0.7 | Very easy | Easiest imaginable |
| 9 | 4.2 | 3.8 | 0.4 | Difficult | Difficult |
| 10 | 4.6 | 5.0 | 0.4 | Easy | Easy |

Table 2: SEQ means and adjective descriptions for ChatGPT and tree test participants.
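The match counts can be checked directly from the adjective columns of Table 2. The following sketch is illustrative only: the ordered list contains just the four adjectives that appear in the table, not the full calibrated scale, and the category names "match", "close", and "different" are labels assumed for this example.

```python
# Ordered from harder to easier; only the adjectives that occur in Table 2.
SCALE = ["Difficult", "Easy", "Very easy", "Easiest imaginable"]

# task: (participants' adjective, ChatGPT's adjective), copied from Table 2.
ADJECTIVES = {
    1: ("Easy", "Easy"),
    2: ("Difficult", "Very easy"),
    3: ("Easiest imaginable", "Easiest imaginable"),
    4: ("Difficult", "Easy"),
    5: ("Very easy", "Very easy"),
    6: ("Easy", "Easy"),
    7: ("Easy", "Very easy"),
    8: ("Very easy", "Easiest imaginable"),
    9: ("Difficult", "Difficult"),
    10: ("Easy", "Easy"),
}


def agreement(a: str, b: str) -> str:
    """Classify two adjectives by how far apart they sit on the scale."""
    gap = abs(SCALE.index(a) - SCALE.index(b))
    if gap == 0:
        return "match"
    if gap == 1:
        return "close"
    return "different"


# Group task numbers by agreement category.
by_category = {"match": [], "close": [], "different": []}
for task, (human, model) in ADJECTIVES.items():
    by_category[agreement(human, model)].append(task)

print(by_category)
```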

We also compared participant task completion and SEQ ease scores, finding a strong correlation for data averaged across tasks (r(8) = .71, p = .02). This suggests that participants’ perception of task difficulty tended to match their performance (consistent with our 2009 large meta-analysis of prototypical usability metrics).

Most Difficult Task

After finishing the tree test, participants selected the task they thought was the most difficult to complete. The most frequently chosen task was finding information on contribution limits for a 401(k) (Task 9). Interestingly, Task 9 was the task that ChatGPT got wrong in most iterations; this may suggest that ChatGPT would struggle in the same situations as real people.

Summary and Discussion

Based on data collected with multiple iterations of ChatGPT and 33 participants finding the location of target items in a tree structure based on the IRS website and using the SEQ to assess perceived task difficulty, we found:

ChatGPT’s findability performance matched or exceeded the best participants. ChatGPT had almost perfect task completion scores, while the mean successful task completion rate across the ten tasks for participants was about 50%. The best-performing participant got nine of the ten tasks correct, performing at the 97th percentile (1/33). This suggests that ChatGPT is not suitable for estimating how well humans will find items in a tree test.

If ChatGPT can’t find it, you probably have a problem. Our findability results suggest you can think of ChatGPT as a sort of power/expert user (performing at or above the 99th percentile). If ChatGPT has problems finding an item in a tree, then it’s likely that a typical user would as well.

ChatGPT predicted people’s ease ratings of the search tasks with reasonable accuracy. We were surprised by how well ChatGPT’s assessment of task difficulty matched actual participants’ SEQ ratings. Across tasks, the correlation was high (r = .62), and the difference in overall mean ratings was small (0.4), with nine out of ten tasks having the same or close adjective description for the difficulty. This indicates that ChatGPT may be suitable for estimating the participants’ ratings of task ease.

Tree order may matter. Some research on large language models (LLMs) such as ChatGPT-4 suggests that results may be affected by the order in which information is fed into the model. For a quick check, we moved the “Credits & Deductions” branch to be first in the tree structure prompt and reran Task 9. ChatGPT then got Task 9 correct in four out of five runs (instead of one out of five runs). We don’t know if the order would also change the frequency with which participants select Task 9 as the most difficult task or if it would change any other aspect of participants’ experiences navigating the tree. Our findings with the current IRS tree structure demonstrated that, overall, ChatGPT outperforms almost all participants in identifying the correct terminal node. The order effect on Task 9 doesn’t change that overall conclusion.
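The reordering check described above could be scripted along these lines. This is a small sketch under assumptions: the helper name is hypothetical, and only the top-level branch labels from the appendix are shown, with each branch's contents left out.

```python
# Top-level branches in the order they appear in the appendix prompt.
TOP_LEVEL = [
    "File",
    "Pay",
    "Refunds",
    "Credits & Deductions",
    "Forms & Instructions",
]


def move_branch_first(branches: list, name: str) -> list:
    """Return a new ordering with `name` first and the rest unchanged."""
    if name not in branches:
        raise ValueError(f"unknown branch: {name}")
    return [name] + [b for b in branches if b != name]


reordered = move_branch_first(TOP_LEVEL, "Credits & Deductions")
print(reordered)
```

Keeping the relative order of the remaining branches means the only thing that changes between the two prompts is the position of the moved branch.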

When conducting research with ChatGPT, do not trust the outcome of a single iteration. There is an argument that it isn’t possible to conduct meaningful research comparing the performance of ChatGPT with UX research conducted on humans (participants) by humans (UX researchers). We disagree with that extreme position, but because ChatGPT outputs are not deterministic, it’s important to check its consistency with multiple iterations (in this research, we ran each prompt five times). For example, the first SEQ rating produced by ChatGPT for Task 9 was 6 (very easy), but after five iterations the average was 3.8 (difficult).
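A simple way to see why a single iteration can mislead is to compare the first rating with the mean across runs. In the sketch below, the five ratings are hypothetical: the article reports only that the first Task 9 rating was 6 and that the five-run average was 3.8, so the other four values are invented to be consistent with those two numbers. The function name is also an assumption.

```python
from statistics import mean


def summarize_runs(ratings: list) -> dict:
    """Summarize repeated ratings of one task across independent runs."""
    if not ratings:
        raise ValueError("need at least one rating")
    return {
        "n": len(ratings),
        "first": ratings[0],
        "mean": mean(ratings),
        "min": min(ratings),
        "max": max(ratings),
    }


# HYPOTHETICAL ratings: only the first value (6) and the mean (3.8) are
# from the article; the remaining four values are made up for illustration.
task_9_ratings = [6, 3, 4, 2, 4]

summary = summarize_runs(task_9_ratings)
print(summary)
```

Reporting the range alongside the mean makes it easier to spot tasks where the model's ratings are unstable from run to run.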

Task appropriateness may matter. Task 2 (“Where would you go to find information about the form you would need if you were a worker that had Income and Social Security withheld?”) was the most difficult task for participants to complete, but ChatGPT solved this task correctly five out of five times. This task was, however, potentially problematic because it applies more to employers than employees, making it difficult for the participants, who as a group probably had little experience as employers. ChatGPT’s success might have been due to connections in its language model between words in the task (e.g., “withheld”) and the terminal in the tree.

Good luck with your taxes this year.

Appendix: Prompts

Prompt 1: The Tree Structure

Imagine you’re on the IRS website, navigating the menu while looking for some information. Learn this website structure, as I will ask you to complete navigation tasks. Use this structure as if you were a real person navigating a real menu and provide the path that you would click through to find information on each task.

MENU STRUCTURE:

- File
  - INFORMATION FOR…
    - Individuals
    - Business & Self Employed
    - Charities and Nonprofits
    - International Taxpayers
    - Federal State and Local Governments
    - Indian Tribal Governments
    - Tax Exempt Bonds
  - FILING FOR INDIVIDUALS
    - How to File
    - When to File
    - Where to File
    - Update Your Information
  - POPULAR
    - Get Your Tax Record
    - Apply for an Employer ID Number (EIN)
    - Check Your Amended Return Status
    - Get an Identity Protection PIN (IP PIN)
    - File Your Taxes for Free
- Pay
  - PAY BY
    - Bank Account (Direct Pay)
    - Debit or Credit Card
    - Payment Plan (Installment Agreement)
    - Electronic Federal Tax Payment System (EFTPS)
  - POPULAR
    - Your Online Account
    - Tax Withholding Estimator
    - Estimated Taxes
    - Penalties
- Refunds
  - Where’s My Refund
  - What to Expect
  - Direct Deposit
  - Reduced Refunds
  - Amend Return
- Credits & Deductions
  - INFORMATION FOR…
    - Individuals (For you and your family)
    - Business & Self-Employed (Standard mileage and other information)
  - POPULAR
    - Earned Income Credit (EITC)
    - Child Tax Credit
    - Clean Energy and Vehicle Credits
    - Standard Deduction
    - Retirement Plans
- Forms & Instructions
  - POPULAR FORMS & INSTRUCTIONS
    - Form 1040 (Individual Tax Return)
    - Form 1040 Instructions (Instructions for Form 1040)
    - Form W-9 (Request for Taxpayer Identification Number (TIN) and Certification
    - Form 4506-T (Request for Transcript of Tax Return)
    - Form W-4 (Employee’s Withholding Certificate)
    - Form 941 (Employer’s Quarterly Federal Tax Return)
    - Form W-2 (Employers engaged in a trade or business who pay compensation)
    - Form 9465 (Installment Agreement Request)
  - POPULAR FOR TAX PROS
    - Form 1040-X (Amend/Fix Return)
    - Form 2848 (Apply for Power of Attorney)
    - Form W-7 (Apply for an ITIN)
    - Circular 230 (Rules for Governing Practice before IRS)

ChatGPT: [response]

Prompt 2: The Tasks

Take your best guess at where each piece of information is located, even for paths that you are not certain about. You should have a path for every task. Represent the path by including a > in between each step.

Your tasks are as follows:

- Where would you go to find out if you can pay your taxes with PayPal?

- Where would you go to find information about the form you would need if you were a worker that had Income and Social Security withheld?

- Where would you go if you were expecting a tax refund and had not yet received it?

- Where would you go to get more information on possible consequences for not paying your taxes?

- Where would you go to find out if your electric vehicle could save you any money on your taxes?

- Where would you go to learn about how to file taxes for an LLC?

- Where would you go to learn how to update your address?

- Where would you go to learn about how children can reduce your taxes?

- Where would you go to find information on how much you can contribute to your 401(k)?

- Where would you go to find information on past-due child support that may impact your refund?

ChatGPT: [response]

Prompt 3: Task Difficulty Assessment

Great, now for each of the tasks you completed, indicate how difficult an average person would think it was to find each topic from 1-Very difficult to 7-Very easy.

ChatGPT: [response]