ChatGPT is probably not going to take your UX research job. However, another researcher who can use AI tools such as ChatGPT effectively might.

ChatGPT is probably not going to take your UX research job. However, another researcher who can use AI tools such as ChatGPT effectively might.

In an earlier article, we explored using ChatGPT-4 to augment researchers’ abilities to sort open-ended comments. We found that the results were comparable to how humans performed, although they had some shortcomings.

We wanted to continue exploring AI’s capabilities by applying it to other UX research methods. Although we are a bit skeptical that AI can identify usability problems on a website, we thought an activity like card sorting might be well suited for AI. Because large language models (LLMs) like ChatGPT are trained on many examples of language usage, it seems plausible that ChatGPT can generate categories for lists of items based on their similarities.

Card sorting is a popular UX research method often used to restructure the navigation (information architecture) of a website’s or software’s menu structure. Before the widespread availability of computers, the items to be sorted were printed on physical cards. There are two types of card sorts: closed (researchers specify the groups into which items can be sorted) and open (participants determine the groups into which they sort items).

In a modern open card sort, participants use a card sorting program to organize a list of items into groups and then name the groups. This type of card sorting provides quantitative outputs (a similarity matrix and dendrogram) and qualitative outputs (lists of categories and reasons for sorting/naming).

Unfortunately, these quantitative and qualitative outputs are not deterministic and can only guide researchers to their conclusions. Participants will inevitably disagree on which cards belong together and what to name categories. Participants in card-sorting studies aren’t trained taxonomists; instead, they rely on their own experiences and other websites’ conventions, often leading to unhelpful categorizations. Consequently, extracting insights from open card sorting requires a fair amount of judgment from researchers.

In this article, we describe an experiment conducted with ChatGPT-4 to see whether it might be a useful tool to help researchers in their analysis of results from open card sorts.

Card Sorting Comparison Study

To assess ChatGPT-4’s ability to generate card sorting results comparable to humans, we needed a good comparison derived from actual participants. We used the card sorting items and results from an earlier study in which participants sorted items that were text-only, image-only, or image+text. In that earlier study, we used 40 items pulled from the Best Buy website and had 623 participants sort the items and name the categories (an open card sort). For this comparison study, we focus only on the 200 responses we received for the text-only items.

We provided ChatGPT with the same list of 40 items in a randomized order and asked it to sort them using as many categories as it wanted and to describe the categories (see Prompt 1 in Appendix A).

ChatGPT Results

Figure 1 shows the dendrogram from the earlier analysis and the categories generated by ChatGPT-4.

Figure 1: (a) Dendrogram from card sort and (b) ChatGPT-4 categorization of the 40 items.

To compare the results, we looked at the number of categories used, the category names, and the percentage of times items were placed in the same group in both sorts (human participants and ChatGPT).

Number of Categories Aligned Well

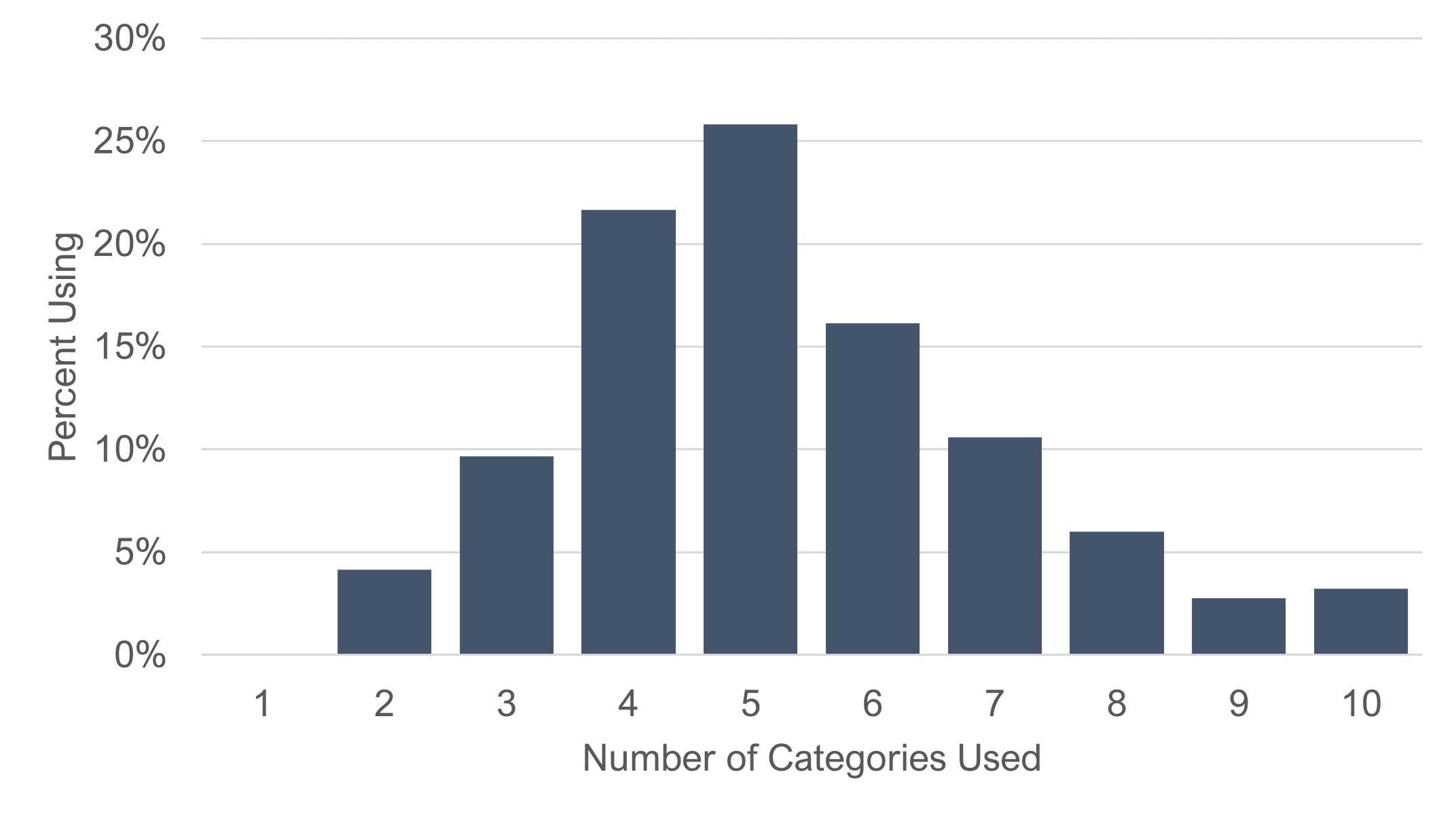

ChatGPT used five categories to sort the 40 items based on the “primary function or usage of each item.” In the card sorting exercise, about 25% of the 200 participants also sorted the items into five groups, which was the mode of the distribution shown in Figure 2.

Figure 2: Number of categories used by the 200 participants when sorting the 40 items.

Categories Named Appropriately

The category names generated by the participants tended to be single words (e.g., Kitchen, Appliances, Electronics). But in an open card-sort analysis, researchers don’t just default to what participants provide. Instead, researchers must use the most common names along with other taxonomic strategies, such as combining the top categories associated with the most cards grouped together. We first split the 40 items into five categories based on their clustering in the dendrogram and then reviewed the user-generated categories for each item.

For example, the first category contained kitchen-related products, and the top two most common categories provided by participants were “Kitchen” and “Appliances.” ChatGPT called this category “Kitchen Appliances.”

Using the two most common user-generated categories, we derived names for the remaining four groups. Two of the user-generated categories had very similar names (Electronics and Computer), so we differentiated these two categories based on other category suggestions. Table 1 shows the user-generated (researcher-synthesized) and ChatGPT category names.

| Synthesized from Most Common User Group Names | ChatGPT Category Names |

|---|---|

| Kitchen Appliances | Kitchen Appliances |

| Home Appliances | Household Utilities |

| Bathroom & Personal Care | Personal Gadgets & Accessories |

| Electronics & Entertainment | Home Electronics & Entertainment |

| Computer & Electronics | Office & Computing Accessories |

Table 1: Researcher synthesized and ChatGPT generated category names.

Good Agreement Between Card Sorting and ChatGPT Assignment of Items to Groups

To determine the similarity of item grouping by participants and ChatGPT, we first determined the best alignment of the five groups in the card sort categorization (based on inspection of the dendrogram) and the ChatGPT assignments by maximizing the number of items in common.

For example, the card sort results and ChatGPT had nine items in common in their respective Kitchen Appliances categories. The only discrepancy was ChatGPT’s assignment of one item (Refrigerator) to a different category (Household Utilities), so there was a 90% overlap (9/10). In contrast, as shown in Table 2, the fifth card sort group had a 44% (4/9) overlap with ChatGPT.

| Category Match | Item in 5th Group | Participant Category Name 1 | Participant Category Name 2 | ChatGPT Category |

|---|---|---|---|---|

| 1 | Flash drive | Electronics | Computer | Office & Computing Accessories |

| 0 | Laptop | Electronics | Computer | Personal Gadgets & Accessories |

| 1 | Keyboard | Electronics | Computer | Office & Computing Accessories |

| 0 | Headphones | Electronics | Entertainment | Personal Gadgets & Accessories |

| 1 | Mouse | Electronics | Office | Office & Computing Accessories |

| 0 | Tablet | Electronics | Technology | Personal Gadgets & Accessories |

| 1 | Printer | Electronics | Computer | Office & Computing Accessories |

| 0 | Cell phone | Electronics | Technology | Personal Gadgets & Accessories |

| 0 | Fitness Tracker | Electronics | Personal Care | Personal Gadgets & Accessories |

Table 2: Category matching (Column 1) for the fifth group that we named Computer & Electronics based on the card sort (Columns 3 and 4 show the first and second most commonly used category names for each item). Column 5 is the ChatGPT category name for each item.

After performing this process across all 40 items and five groups, the overlaps by group (keyed to the card sort groupings) were:

- Kitchen Appliances: 9/10

- Home Appliances: 4/9

- Bathroom & Personal Care: 5/5

- Electronics & Entertainment: 3/7

- Computer & Electronics: 4/9

The overall match rate (number of items matched in groups divided by the total number of items) was 63% (25/40).

Because ChatGPT output is nondeterministic, we generated two additional sets of ChatGPT responses using different prompts (see Appendix A). We noticed a few things in the additional runs. In the second run, ChatGPT inexplicably left off one of the 40 items (Trimmer). In the third run, ChatGPT generated six categories, one with a “Miscellaneous” label.

Despite these discrepancies, the category match rates were similar across all three runs. After repeating the matching exercise for the two additional groupings, we found respective match rates of 77% and 65%—an overall average match rate of 68% across the three runs.

As shown in Appendix B, the expected match rate by chance is 21%, so the value of kappa across these three runs is .60. Kappa, a measure of chance-corrected agreement, can take values between −1 and 1 and is often interpreted with the Landis and Koch guidelines (poor agreement: ≤ 0, slight: 0.01–0.20, fair: 0.21–0.40, moderate: 0.41–0.60, substantial: 0.61–0.80, almost perfect agreement: 0.81–1.00). According to these guidelines, the interrater agreement between card sorting and ChatGPT in this study was on the border between moderate and substantial agreement.

Summary and Discussion

Our comparison of ChatGPT’s ability to sort items into groups and to appropriately name the groups with the groups synthesized by human researchers from a standard open card sort found:

There was a strong similarity in numbers and names of categories. When unconstrained, ChatGPT classified the 40 items into five categories, consistent with the modal number of categories produced by participants in the card-sort study. The wording of ChatGPT category names was appropriate (e.g., Kitchen Appliances, Home Electronics & Entertainment) and consistent with those synthesized by the card sort researchers.

Items matched most of the time. The alignment of categories and items within categories was not perfect between the card sort and ChatGPT classifications, but given a match rate of 68%, items were more likely than not to be placed in the same category.

The interrater reliability between the two methods was on the border between moderate and substantial agreement. The chance expectation for agreement given the conditions in this study was 21%, much lower than the observed mean match rate of 68%. The resulting value of kappa, a measure of chance corrected agreement, was .60, interpreted as bordering moderate and substantial agreement.

There weren’t any obviously bad ChatGPT placements. We’ve certainly seen some questionable categorization and names from participants in card sort studies, especially when there are many cards using abstract concepts. Although there was some disagreement between ChatGPT and the card sort groupings, ChatGPT didn’t make any obviously bad decisions like putting “Refrigerator” in “Office and Computer Accessories.”

ChatGPT classification is a low-effort method. Relative to the effort required to conduct and analyze an open card sort, it’s very easy to prompt ChatGPT to organize and classify a set of items. This makes it an excellent way for UX researchers to create a first draft of the organization and classification of items in an information architecture, either before or after collecting card-sort data.

ChatGPT classification does not require human participants. One of the original goals of card sorting was to investigate human mental models of item classification, either for pure research or to guide the design of information architectures (Tullis, 1985). It is possible to think of a large language model, which at its heart is a model of the next most likely word driven by deep neural networks, as also being a type of mental model based on enormous amounts of human-generated text. Even though ChatGPT classification does not directly involve human participants engaged in a sorting activity, its architecture appears to allow it to produce similar outputs.

ChatGPT classification might not work as well with less common items in specialized domains. The strength shown by ChatGPT in classifying common items might lead it to work more poorly with uncommon items. That limitation might be overcome by creating a custom GPT model, but that is only practical when there is enough data to build a good model (according to OpenAI, “billions of tokens at a minimum”).

Future research: Now that systems like ChatGPT are becoming more sophisticated, we anticipate an explosion of research exploring its capabilities and limitations as a part of the UX researcher’s toolkit. For example, it would be interesting to compare the results of tree tests in which human participants navigate information architectures based on card sorting versus ChatGPT generation.

Appendix A

This appendix documents the ChatGPT prompts used in this research.

Prompt 1

Prompt (We asked ChatGPT):

“I want you to take the perspective of a shopper on a consumer website. You should follow my instructions as if you were fulfilling this role. For context, I’m seeing how you would complete this task, which is a card sort task.

“Consider the following list of items and how you would best group them. Create as many groups as you deem appropriate, and give them descriptive names. Then, give me the groups and how you would categorize each item.”

[list of 40 items from Figure 1a dendrogram]

ChatGPT (Responded):

“For this card sort task, I would create groups based on the primary function or usage of each item. Here are the groups I propose along with the categorization of each item:”

[grouped item list shown in Figure 1b]

“Each item is placed in a group where its primary use or functionality aligns best. For example, the ‘Kitchen Appliances’ group contains items predominantly used in the kitchen for cooking or food preparation. ‘Home Electronics & Entertainment’ includes items related to household comfort and entertainment. ‘Personal Gadgets & Accessories’ are items used on a personal level, often portable. ‘Office & Computing Accessories’ are items commonly used in conjunction with computers or in office settings. Finally, ‘Household Utilities’ includes larger home appliances and items for general household use.”

Prompt 2

Prompt (We asked ChatGPT):

“I want you to take the perspective of a shopper on a consumer website. You should follow my instructions as if you were fulfilling this role. For context, I’m seeing how you would complete this task, which is a card sort task.

“Consider the following list of items and how you would best group them. Create between 3 to 5 groups as you deem appropriate and give them descriptive names. Then, give me the groups and how you would categorize each item.”

[list of 40 items from Figure 1a dendrogram]

ChatGPT (Responded):

“To organize these items into groups, I will consider their primary function and usage context. Here’s how I would categorize them into five groups:”

[list of items placed in groups]

“This categorization considers the primary use of each item, grouping them into intuitive and contextually relevant categories.”

Prompt 3

Prompt (We asked ChatGPT):

“Group the following items into categories.”

[list of 40 items from Figure 1a dendrogram]

ChatGPT (Responded):

“The items you’ve listed can be categorized based on their primary use or function. Here’s a possible grouping:”

[list of items placed in groups]

“This categorization is based on the primary function of each item. Some items might fit into multiple categories depending on their features or how they are used.”

Appendix B

This appendix documents the computation of kappa from match rates.

Kappa is a commonly used measure of interrater reliability that corrects for chance agreement in classification. The basic formula for kappa is k = (po − pe)/(1 − pe), where po is the observed magnitude of agreement between two raters (in this study, the match rate) and pe is the expected magnitude of agreement by chance. To use this formula to compute kappa for match rates, we need to compute the magnitude of pe.

From the card sort results, we had five categories with different numbers of items in each category. We can use simple probabilities to compute the expected number of matches by chance for each group, then sum those for an overall expected match rate, as shown below.

| Group | Size | pe | ne | no | po |

|---|---|---|---|---|---|

| A | 10 | 0.25 | 2.5 | 9 | 0.90 |

| B | 9 | 0.23 | 2.025 | 4 | 0.44 |

| C | 5 | 0.13 | 0.625 | 5 | 1.00 |

| D | 7 | 0.18 | 1.225 | 3 | 0.43 |

| E | 9 | 0.23 | 2.025 | 4 | 0.44 |

For example, when the size of a group is 10, the chance expectation of one of 40 items being randomly assigned to that group is 10/40 (25%), so the expected number of matches by chance is .25 × 10 = 2.5. Across the five groups, the expected number of matches by chance is the sum of the values in the ne column, which is 8.4, so the expected match rate by chance is 8.4/40 = 21%. The observed match rate is the sum of the values in the no column divided by 40, which is 25/40 = 63%. The corresponding value of kappa is (.63 − .21)/(1 − .21) = .53.