Benchmarking is an essential step in making quantitative improvements to the user experience of a website, app, or product.

I discussed the benefits of regular benchmarking in an earlier article and course, and it’s the topic of my forthcoming book.

While technology platforms like MUIQ have made unmoderated benchmarks popular, moderated benchmarks are still essential for benchmarking physical products and desktop and enterprise software. They can also be a better option for websites and mobile apps.

Benchmarks are all about the data, so you’ll want to ensure your procedures are in place to collect reliable and representative data. Before running your first participant, here are five things to do to prepare for a moderated benchmarking study.

1. Review the Study Script

Have the facilitators go through the script in detail to ensure they understand all the steps participants can possibly take with the interface. Facilitators should pay particular attention to points where they will need to probe or intervene and ensure they know how to score each task.

Tip: To collect more reliable task time metrics, we recommend that facilitators not probe or interrupt the participant mid-task. Instead, save questions and probing points for between tasks or for the beginning or end of the study.

2. Check the Technology (Twice)

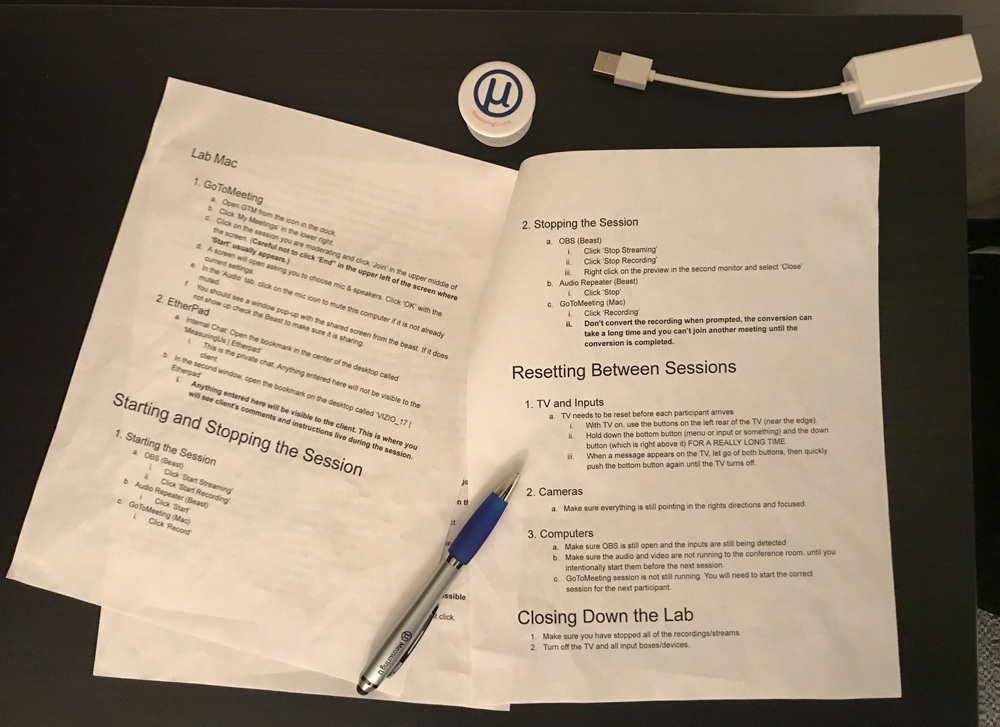

If technology always worked as planned, there wouldn’t be a need for so many benchmark studies! Murphy’s law definitely applies here. Like Santa, you’ll want to make a list and check it twice. Check and double-check your tech setup and plan for things to go wrong at the worst time during your study. We have a separate checklist for technology that includes points for:

- Session recordings: Be sure the screen, audio, and backup recordings are working on site and remotely. Common Fail Point: Running out of space or untimely Windows updates! Check the available disk space and force updates ahead of time if you can.

- Observer access: If observers are watching on site (ideally in another room, not in the lab!) or remotely, ensure they can see and hear the participant and moderator. We run both GoTo Meetings and a live stream of the sessions. Common Fail Point: Muted microphones. Make sure to unmute them before the participant begins.

- Chatting: Ensure both observers and stakeholders can chat with the facilitator electronically (and not interrupt the participant). We have a separate real-time chat we use for facilitated sessions that allows for quick and discreet communication (but it has to be working and accessible to all involved). Common Fail Point: Observers not knowing the URL of the chat. Save the URL to calendar invites or bookmark the chat page.

- Data recording in place: Ensure the facilitators are set up to collect metrics like task time, completion rates, and errors (in Excel, in a web form, or on paper). If participants are answering rating scales (e.g. the SUS) on a separate computer, on a laptop, on paper, or verbally, check that this is working. Common Fail Point: Not having an easy way for participants to respond to multi-point scales. Print out scales ahead of time.

- Interface/product tech check: Ensure whatever interface is being benchmarked (e.g. accounting software, a web application, remote control, or mobile device) works as planned and that any usernames and passwords are accessible and work! Common Fail Point: Constant product updates often affect critical steps in tasks and account credentials go stale. Test again an hour before the session.
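The disk-space fail point in the session recordings item above is one of the few checks that is easy to automate. Here is a minimal Python sketch, assuming recordings are saved to a local drive. The function name, the example folder, and the 20 GB threshold are illustrative assumptions, so adjust them to your own recording setup.

```python
import shutil


def has_enough_space(path: str, required_gb: float) -> bool:
    """Return True if the drive holding `path` has at least `required_gb` free.

    `path` is any existing folder on the drive where recordings are saved.
    """
    free_bytes = shutil.disk_usage(path).free
    return free_bytes >= required_gb * 1024**3


if __name__ == "__main__":
    # Hypothetical values: check the current folder's drive for 20 GB free.
    recordings_folder = "."
    if has_enough_space(recordings_folder, required_gb=20):
        print("OK: enough free space for today's recordings")
    else:
        print("WARNING: free up disk space before the first session")
```

Running this before the first session each day (and again an hour before, along with the product check) takes a few seconds and catches a full drive before it costs you a recording.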

Tip: Try to reduce the intrusion of monitoring equipment as there’s some evidence such equipment increases stress and even error rates.
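For the data recording item in the checklist above, it helps to settle on one record layout before the first participant so every facilitator logs the same fields. The following is a rough sketch rather than a prescribed format: the `TaskObservation` fields and the function names are assumptions chosen to match the metrics mentioned above (task time, completion, and errors).

```python
import csv
from dataclasses import asdict, dataclass, fields


@dataclass
class TaskObservation:
    """One participant's attempt at one task (hypothetical field names)."""
    participant_id: str
    task_id: str
    completed: bool
    time_seconds: float
    errors: int


def write_observations(observations, out_file):
    """Write observations as CSV rows with a header to an open text file."""
    column_names = [f.name for f in fields(TaskObservation)]
    writer = csv.DictWriter(out_file, fieldnames=column_names)
    writer.writeheader()
    for obs in observations:
        writer.writerow(asdict(obs))


def completion_rate(observations):
    """Proportion of observations in which the task was completed."""
    if not observations:
        raise ValueError("no observations recorded")
    return sum(obs.completed for obs in observations) / len(observations)
```

To save to disk, open the file with `open("session_log.csv", "w", newline="")` (the file name is only an example) and pass it to `write_observations`. Filling in a row or two during the dry run is a quick way to confirm the layout captures everything you plan to report.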

3. Perform a Dry Run

Facilitators should conduct a dry run using an internal or throwaway participant (e.g. a colleague not involved in the study) to get a sense of how long the study will take and to confirm that the technology is working and not interfering with the interface or participant.

4. Run a Pilot

If possible, plan for a pilot as your first participant or two. Treat the pilot as you would a standard user and plan to debrief after the pilot to review the study script, identify any usability issues, and resolve any other potential study issues. If all goes well, these pilot participants’ data can be included in the full analysis. If you make significant changes to your study script, tasks, or facilitation style though, consider excluding pilot data from the analysis (or at least note the differences in your report).
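One way to decide whether pilot data belongs in the analysis, or to note the difference in a report, is to compute a metric both ways. The sketch below assumes each task attempt is stored as a dictionary with hypothetical `completed` and `is_pilot` keys. It compares completion rates only, but the same pattern applies to task time or errors.

```python
def completion_rate(records):
    """Proportion of task attempts that were completed."""
    if not records:
        raise ValueError("no records to analyze")
    return sum(1 for r in records if r["completed"]) / len(records)


def compare_with_and_without_pilot(records):
    """Return completion rates for all data and for non-pilot data only."""
    non_pilot = [r for r in records if not r["is_pilot"]]
    return {
        "all_participants": completion_rate(records),
        "excluding_pilot": completion_rate(non_pilot),
    }


if __name__ == "__main__":
    # Made-up example data: two pilot attempts and two regular attempts.
    attempts = [
        {"participant": "P01", "completed": False, "is_pilot": True},
        {"participant": "P02", "completed": True, "is_pilot": True},
        {"participant": "P03", "completed": True, "is_pilot": False},
        {"participant": "P04", "completed": True, "is_pilot": False},
    ]
    print(compare_with_and_without_pilot(attempts))
```

If the two figures differ noticeably after a script or task change, that is a signal to exclude the pilot sessions or at least call out the difference.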

5. Check In with Stakeholders

Address any questions or problems that come up from the pilot participant or the technology. This can include any changes in task descriptions, success criteria, and pre- and post-study questions, as well as the facilitation style of the moderator.

Summary

To make a moderated UX benchmark study more effective, use a few pre-study steps: review the study script, check the tech, perform a dry run, run a pilot, and check in with stakeholders. Formalizing these steps in a simple checklist (like the one here) helps ensure you’re consistent and minimizes common fail points.