UX benchmarking is an effective method for understanding how people use and think about an interface, whether it’s for a website, software, or mobile app.

Benchmarking becomes an essential part of a plan to systematically improve the user experience.

A lot is involved in conducting an effective benchmark. I’m covering many of the details in a 4-week course sponsored by the UXPA this October 2017. More on benchmarking will be available in my forthcoming book, Benchmarking the User Experience.

To start, benchmarking the user experience effectively means first understanding what benchmarking is and what the user experience is, and then progressing to methods, metrics, and analysis.

What Is User Experience?

Few things seem to elicit more disagreement than the definition of user experience and how it may or may not differ from user interface design or usability testing. While I don’t intend to offer an official definition (there’s some health in the debate), here’s the definition I use, which is similar to that of Tullis & Albert: The user experience is the combination of all the behaviors and attitudes people have while interacting with an interface. These include but aren’t limited to:

- Ability to complete tasks

- The time it takes to complete tasks or find information

- Ability to find products or information

- Attitudes toward visual appearance

- Attitudes toward trust and credibility

- Perceptions of ease, usefulness and satisfaction

These are also many of the classic usability testing metrics, but include broader metrics dealing with attitudes, branding, loyalty, and appearance. As such, we borrow heavily from usability testing methods and terminology.

What Is Benchmarking and Why Do It?

A benchmark is a standard or point of reference against which metrics may be compared or assessed, which gives a good idea of its purpose. The word has an interesting etymology: It originally comes from land surveyors who would cut a mark into a stone to secure a bracket called a “bench.” This would be used as a point of reference for building.

With computers, a benchmark is usually an evaluation of software or hardware performance (such as CPU or database performance) that sets a standard against which future tests or trials can be gauged. Similarly, UX benchmarking involves evaluating an interface using a standard set of metrics to gauge its relative performance.

Figure 1: A mark used by surveyors to place the “bench” or leveling-rod for setting the correct elevation.

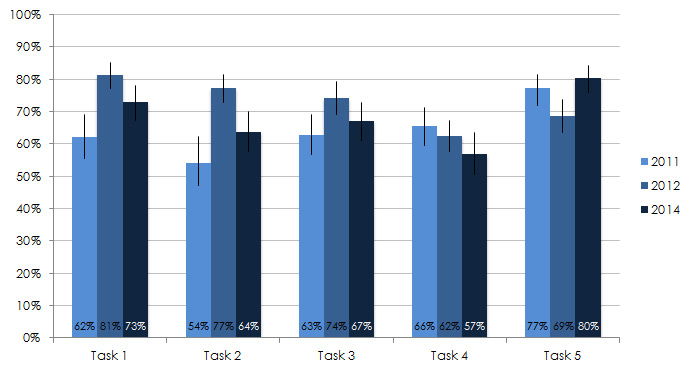

One of the hallmarks of measuring the user experience is seeing whether design efforts actually made a quantifiable difference over time. A regular benchmark study is a great way to institutionalize that. Benchmarks are most effective when done on a regular interval (e.g. every year or quarter) or after significant design or feature changes. Figure 2 shows the same tasks from an automotive website across three years: 2011, 2012, and 2014.

Figure 2: Completion rates for five tasks from a UX benchmarking study for an automotive website.

A good benchmark indicates where a website or product falls relative to some meaningful comparison. It can be compared to:

- Earlier versions of the product/website

- The competition

- The industry as a whole

- An industry standard (such as an NPS or conversion rate)

- Other products in the same company
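As a rough illustration of comparing against an earlier version, the sketch below checks whether a task's completion rate differs between two benchmark rounds. It uses a standard pooled two-proportion z-test, which is a general statistical technique chosen here for illustration and not necessarily the analysis used in the studies described in this article. The function name and the counts are hypothetical.

```python
import math

def compare_completion_rates(successes_a, n_a, successes_b, n_b):
    """Pooled two-proportion z-test.

    Returns (difference in rates b - a, z statistic, two-sided p-value).
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Both rounds are all successes or all failures: no detectable difference.
        return p_b - p_a, 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_b - p_a, z, p_value

# Hypothetical counts: 30 of 50 participants completed the task in the
# earlier round, 40 of 50 in the later round.
diff, z, p_value = compare_completion_rates(30, 50, 40, 50)
print(f"difference = {diff:.2f}, z = {z:.2f}, p = {p_value:.3f}")
```

With small samples, a test like this can be imprecise, so treat the p-value as a guide rather than a verdict.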

What Can You Benchmark?

While just about anything can be benchmarked, the most common interfaces that have benchmark evaluations are:

- Websites (B2C & B2B): The shopping experience on Walmart.com, Costco, or GE.com

- Desktop software (B2C & B2B): QuickBooks, Excel, or iTunes

- Web apps: Salesforce.com or MailChimp

- Mobile websites: PayPal’s mobile website

- Mobile apps: Facebook, Snapchat, or a Chase mobile banking app

- Physical devices: Remote controls, in-car entertainment systems, or medical devices

- Internal apps within a company: Expense reporting applications or HR systems

- Service experiences: Customer support calls or out-of-the-box experiences (OOBE)

Two Types of Benchmarking Studies

There are essentially two types of benchmark studies: retrospective and task-based.

Retrospective: Participants are asked to recall their most recent experience with an interface and answer questions. We used this approach for the Consumer Software and Business Software Benchmark reports. With this approach, you don’t need access to the software but are limited to testing with only existing users who need to recall past actions (which can be fallible).

Task-Based: Participants are asked to attempt prescribed tasks on the interface, simulating actual usage in a controlled setting (sometimes also called a concurrent study). This is a common usability test setup and is what we use most often when working with clients. With this approach, you get more detailed task interactions and can test with new and existing users, but you need access to the software or apps and need to define tasks and success criteria.

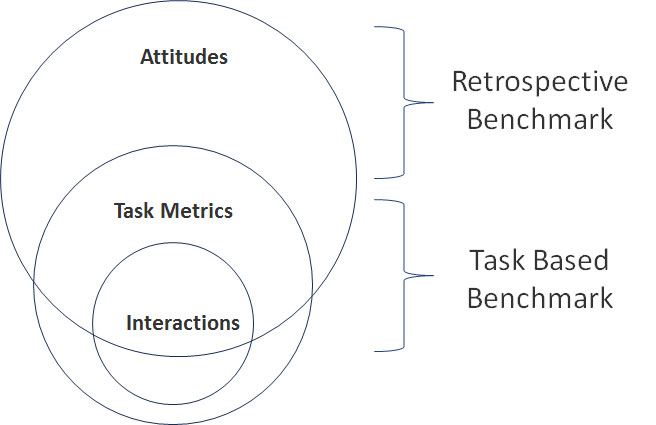

Retrospective and task-based studies focus on different experiences as shown in Figure 3.

Figure 3: Retrospective and task-based benchmark studies focus on different parts of the experience: existing attitudes about prior use (retrospective) and current attitudes and actions from interacting through simulated use (task-based).

Fortunately, UX benchmark studies can combine retrospective and task-based approaches. We take this approach whenever we can. We start by asking current customers to reflect on their experiences, and then ask a mix of new and existing customers to attempt tasks.

We used the mixed approach with our Hotel UX benchmark study. Four hundred and five participants reflected on one of five hotel websites and answered a set of questions about the experience including the SUPR-Q (retrospective). Another 160 participants who had booked any hotel online were randomly assigned to complete two tasks on the same hotel websites (task-based or concurrent). This gave us a fuller picture of the user experience than if we had used only one type of study.

Different Modes of UX Benchmarking

When conducting a task-based UX benchmark, you need to choose between two modes: moderated or unmoderated testing.

Moderated testing: Moderated testing requires a facilitator/moderator with the participant. Moderated testing can be conducted in-person or remotely using monitoring software such as GoToMeeting or WebEx.

Unmoderated testing: Unmoderated testing is similar to a survey. Participants essentially self-administer the study by following directions to answer questions and attempt tasks. Software, such as our MUIQ platform, Loop11, or UserZoom, helps automate the process and collect a rich set of data including timing, clicks, heat maps, and videos. There are also some low-cost, lower-tech solutions, such as using a survey platform like SurveyMonkey to have participants complete tasks and then reflect on the experience (but at the cost of no automatically collected metrics and no videos).

While there are many advantages and disadvantages to moderated and unmoderated testing, the major difference is that unmoderated testing allows you to collect data from more participants in more locations quickly. You sacrifice the richness of a one-to-one interaction, but for many benchmarking studies that sacrifice is worth it to get larger sample sizes.

Benchmark Metrics

Benchmarks are all about the metrics they collect. Benchmark studies are often called summative evaluations, where the emphasis is less on finding problems and more on assessing the current experience. That experience is quantified using both broader study-level metrics and granular task-level metrics (if there are tasks).

Study-Based Metrics

These metrics are typically collected at the end and/or beginning of a study (either task-based or retrospective).

SUPR-Q: Provides a measure of the overall quality of the website user experience plus measures of usability, appearance, trust, and loyalty.

SUPR-Qm: A questionnaire for the mobile app user experience (in press).

SUS: A measure of perceived usability; good for software.

NPS: A measure of customer loyalty for all interfaces; better for consumer-facing ones.

UMUX-Lite: A compact measure of perceived usefulness and perceived ease (article forthcoming).

Brand attitude/brand lift: Brand has a significant effect on UX metrics. Measuring brand before and after a study helps identify how much effect (positive or negative) the experience has on brand attitudes.
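To make two of these study-level metrics concrete, here is a minimal Python sketch of the standard published scoring rules for the SUS (ten items on a 1 to 5 scale, producing a 0 to 100 score) and the NPS (percentage of promoters minus percentage of detractors on a 0 to 10 likelihood-to-recommend item). The function names and sample responses are hypothetical, and the sketch is not taken from any particular testing platform.

```python
def sus_score(responses):
    """Score one participant's SUS questionnaire (ten items rated 1-5).

    Odd-numbered items are positively worded (contribution = rating - 1);
    even-numbered items are negatively worded (contribution = 5 - rating).
    The summed contributions are multiplied by 2.5 to give a 0-100 score.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if not 1 <= rating <= 5:
            raise ValueError("SUS ratings must be between 1 and 5")
        total += (rating - 1) if item_number % 2 == 1 else (5 - rating)
    return total * 2.5

def net_promoter_score(ratings):
    """Compute NPS from 0-10 likelihood-to-recommend ratings.

    Promoters rate 9-10, detractors rate 0-6; NPS is the percentage of
    promoters minus the percentage of detractors.
    """
    if not ratings:
        raise ValueError("at least one rating is required")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100.0 * (promoters - detractors) / len(ratings)

# Hypothetical responses for illustration only.
print(sus_score([3] * 10))                                     # 50.0
print(net_promoter_score([10, 9, 8, 7, 6, 0, 10, 9, 5, 10]))   # 20.0
```

In a benchmark, the per-participant SUS scores would then be averaged across the sample, while the NPS is computed once over all participants.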

Task-Level Metrics

For studies with tasks, the following are the most common metrics collected as part of the task, or after the task.

Attitudes: Perceptions of Ease (SEQ) and confidence (collected after the task)

Actions: Completion rates, task times, errors (collected from the task)
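The action metrics above can be summarized with a few lines of code. The sketch below computes a completion rate with an adjusted-Wald (Agresti-Coull) confidence interval and a geometric mean for task times, which is less sensitive than the arithmetic mean to a few very slow participants. Both are common general-purpose choices assumed here for illustration; the function names and data are hypothetical.

```python
import math

def completion_rate_interval(successes, n, z=1.96):
    """Return (observed rate, lower bound, upper bound).

    Uses the adjusted-Wald (Agresti-Coull) interval: add z^2/2 successes
    and z^2 trials, then apply the usual Wald formula. z=1.96 gives an
    approximate 95% interval.
    """
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    n_adj = n + z ** 2
    p_adj = (successes + z ** 2 / 2) / n_adj
    margin = z * math.sqrt(p_adj * (1 - p_adj) / n_adj)
    return successes / n, max(0.0, p_adj - margin), min(1.0, p_adj + margin)

def geometric_mean_time(times):
    """Geometric mean of positive task times (e.g. in seconds)."""
    if not times or any(t <= 0 for t in times):
        raise ValueError("task times must be positive and non-empty")
    return math.exp(sum(math.log(t) for t in times) / len(times))

# Hypothetical data: 8 of 10 participants completed the task.
rate, low, high = completion_rate_interval(8, 10)
print(f"completion rate = {rate:.0%}, 95% CI = {low:.0%} to {high:.0%}")

# Hypothetical task times in seconds, with one slow participant.
print(f"typical task time = {geometric_mean_time([42, 55, 61, 48, 190]):.1f} s")
```

The width of the interval is a quick way to see how much a larger sample would tighten the estimate.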

Summary

A UX benchmark provides a quantifiable measure of an interface such as a website, mobile app, product, or piece of software. A good benchmark indicates how the interface performs relative to a meaningful comparison, such as an earlier point in time, the competition, or an industry standard. Benchmarks can be retrospective (participants reflect on actual usage) or task-based (participants attempt tasks in simulated use). Collecting data for UX benchmarking involves the same modes as usability testing: moderated or unmoderated approaches. Benchmarking data should come at the study level (SUPR-Q, SUS, NPS) and, if there are tasks, at the task level (completion rates, time, errors, SEQ).