There’s a continued need to measure and improve the user experience.

In principle, it’s easy to see the benefits of having qualified participants use an interface and measuring the experience to produce reliable metrics that can be benchmarked against.

But in practice, a number of obstacles make it difficult: time, cost, finding qualified participants, and even obtaining a stable product to test.

These challenges seem even more daunting in the B2B space, where it’s hard to recruit specialized participants and to get access to enterprise environments. Yet we’ve found that B2B interfaces are actually less usable and most in need of usability testing. For example, the average number of problems in B2B interfaces was almost twice as high as in consumer software and an order of magnitude higher than in websites.

While there are ways of mitigating each of these challenges, in our experience many interfaces simply go without any testing at all, which only perpetuates the problem. To provide practitioners with another option, I worked with Christian Rohrer at Capital One, who created a method that produces a score estimating the amount of “friction” a typical participant is likely to encounter while using an interface. The method is called PURE (Practical Usability Rating by Experts) and relies on experts familiar with UX principles and heuristics, who rate a user experience against a predefined rubric.

The PURE Method

While most UX methods (such as usability testing, card sorting, and surveys) are empirical, the PURE method is analytic. It’s not based on directly observing users; instead, it relies on experts judging the difficulty of the steps users would take to complete tasks, using principles derived from user behavior. It’s the same idea behind expert reviews, guideline reviews, and Keystroke Level Modeling (KLM). An expert review (often loosely referred to as a heuristic evaluation) is a popular usability method in which multiple evaluators examine an interface against a set of rules (called heuristics). The result is a list of problems, or potential problems, that users will likely encounter. Expert reviews tend to identify around 30% of the problems found in usability tests of the same interface.

Keystroke Level Modeling involves deconstructing tasks into smaller steps and then using pre-calibrated times to estimate how long it would take an expert user to complete the task error-free. The PURE method is a hybrid of expert reviews and KLM.
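To make the KLM idea concrete, here is a minimal sketch that sums per-operator times for a sequence of actions. The operator times are commonly cited approximations from Card, Moran, and Newell's original KLM work and are assumptions for illustration; they are not part of the PURE method, and the task step is hypothetical.

```python
# Commonly cited KLM operator times in seconds (illustrative assumptions).
KLM_SECONDS = {
    "K": 0.28,  # press a key or mouse button (average typist)
    "P": 1.10,  # point with the mouse to a target on screen
    "H": 0.40,  # move hands between keyboard and mouse
    "M": 1.35,  # mentally prepare for the next action
}


def klm_estimate(operators: str) -> float:
    """Estimated error-free time in seconds for a string of KLM operators."""
    return sum(KLM_SECONDS[op] for op in operators)


# Hypothetical step: think, point at a search box, click it,
# move hands to the keyboard, then type a 5-character query.
search_step = "MPKH" + "K" * 5
print(f"{klm_estimate(search_step):.2f} s")
```

PURE borrows the decomposition half of this approach (breaking a task into steps along the path a user would take) but replaces the calibrated times with expert difficulty ratings.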

To apply the PURE method, have two or more evaluators complete the following steps:

- Identify the target user or persona (very similar to an initial step in a usability test).

- Determine the critical or top tasks for this persona.

- Decompose each task into logical steps and the path(s) this typical user would take to complete the task. As with KLM, specify what steps your target user will take through the interface. There will likely be disagreement on what path users actually take; use data when available and document judgments.

- Independently score the difficulty/mental effort of each task step using a 3-point scale, referred to as the scoring rubric (shown in Figure 1).

| Score | Description |
| --- | --- |
| 1 (green) | The step can be accomplished easily by the target user, due to low cognitive load or because it’s a known pattern, such as accepting a Terms of Service agreement. |
| 2 (yellow) | The step requires some degree of cognitive load by the target user, but can generally be accomplished with some effort. |
| 3 (red) | The step is difficult for the target user, due to significant cognitive load or confusion; some target users would likely fail the task at this point. |

Figure 1: Scoring rubric for PURE.

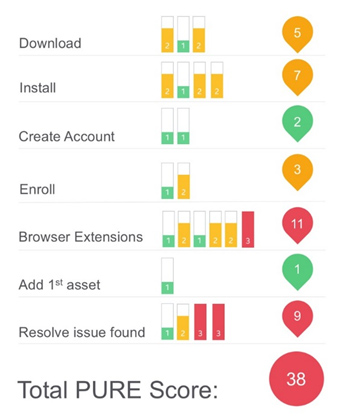

- Reconcile differences in ratings between evaluators to agree on one rating per step (noting the reasons for disagreement). A task’s PURE score is the sum of the scores of all steps in that task. The overall color for a task, and for the product, is determined by the worst-rated step within that task or product. For example, a single red step (rated 3) in a task causes that entire task, and the product, to be colored red. The rationale is that no mature consumer product should have a step in which the target user is likely to fail a fundamental task. An example PURE scorecard is shown in Figure 2.

Figure 2: Total PURE score for a product (38) and task-based scores (from “Download” to “Resolve Issue Found”).
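The scoring rules in the last step can be expressed in a few lines of code. This is a minimal sketch, assuming each step already has a single reconciled rating from 1 to 3. The task names and ratings are hypothetical, and rolling up the product total as the sum of task scores is an assumption based on the total shown in Figure 2.

```python
from dataclasses import dataclass

# Rubric colors; 3 = red follows the text, 1 = green and 2 = yellow assumed.
COLORS = {1: "green", 2: "yellow", 3: "red"}


@dataclass
class Task:
    name: str
    step_scores: list[int]  # one reconciled 1-3 rating per step

    def __post_init__(self) -> None:
        if not self.step_scores or any(s not in COLORS for s in self.step_scores):
            raise ValueError("each step must be rated 1, 2, or 3")

    def pure_score(self) -> int:
        # A task's PURE score is the sum of its step scores.
        return sum(self.step_scores)

    def color(self) -> str:
        # The task's color follows its worst (highest-rated) step.
        return COLORS[max(self.step_scores)]


def product_summary(tasks: list[Task]) -> tuple[int, str]:
    """Hypothetical roll-up: summed task scores plus the worst step's color."""
    total = sum(t.pure_score() for t in tasks)
    worst = max(max(t.step_scores) for t in tasks)
    return total, COLORS[worst]


tasks = [
    Task("Download", [1, 1, 2]),
    Task("Sign up", [1, 2, 1, 1]),
    Task("Resolve issue", [2, 3, 1]),
]
for t in tasks:
    print(t.name, t.pure_score(), t.color())
print(product_summary(tasks))
```

Because color is driven by the maximum rather than the sum, a task with many easy steps can still turn red if a single step is likely to cause failure.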

Reliability & Validity

As with any measurement method, we assessed the reliability (consistency and agreement) and validity (how well the ratings predicted actual empirical data) of the PURE method.

We conducted a traditional benchmarking study on three consumer software products (15 moderated participants) and eight websites (202 unmoderated participants). Our primary metrics were the SUPR-Q, SUS, and NPS at the study level, and completion rate, task ease (using the SEQ), and task time at the task level.

Separately, we had four evaluators complete an hour of training on the PURE method and then independently evaluate the software and websites (following the five steps described above) to create PURE scores.

To assess reliability, we computed the average correlation between evaluators (called inter-rater reliability). It was r = 0.5, suggesting reasonable agreement given the modest amount of training. More experienced teams using the method had higher agreement, with inter-rater reliability ranging from r = 0.6 to 0.9.

The method also showed reasonable concurrent validity for some of the key benchmark metrics, with medium-sized, statistically significant correlations. The correlation with task ease (SEQ) across products and websites was r = 0.5. The correlation with the SUS was r = 0.5, and with the SUPR-Q it was r = 0.6. The correlations with task time and completion rates were not statistically different from 0. In short, PURE scores can explain (predict) around 25% to 36% of the variation in perceived task difficulty, overall usability, and the quality of the website user experience for some consumer software and websites. This is comparable to the roughly 30% overlap between problems identified in expert reviews and those found in usability tests.
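The 25% to 36% range comes from squaring the correlation coefficients: the proportion of variance one measure explains in another is r². This short check applies that to the correlations reported above.

```python
def variance_explained(r: float) -> float:
    """Proportion of variance in one measure accounted for by another (r squared)."""
    return r ** 2


# Correlations between PURE scores and benchmark metrics reported above.
for metric, r in [("SEQ", 0.5), ("SUS", 0.5), ("SUPR-Q", 0.6)]:
    print(f"{metric}: r = {r}, r^2 = {variance_explained(r):.0%}")
```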

Discussion

Like other analytic techniques in the UX methodology toolbox, the PURE method shows promise as a quick and reliable method for estimating some key benchmark metrics. Here are a few things to consider.

Not a substitute for usability testing: The PURE method is not a replacement for usability testing. In our experience, it’s ideally used for identifying low-hanging problems in an interface that’s unlikely to be tested with users (because of cost, priority, or difficulty testing it) and when a metric about the experience is needed for management.

Scale refinement: The 3-point scale used in the scoring rubric may need more points of discrimination, as it’s often hard to pick between, say, a 2 and a 3. Using the average of multiple evaluators as the PURE score does provide more points of discrimination, but may present too much variation for executives. We’ll continue to evaluate the rubric and scale.

Double experts help: As with heuristic evaluations, judges who are versed in interface design and who also know the users and domain are likely to generate more reliable results.

Repeat for tasks and personas: For tasks with multiple paths and multiple personas, you may want to conduct multiple PURE evaluations and then compare or average the ratings.

We’ll continue to assess PURE’s validity and reliability between evaluators. If you’d like to give the PURE method a try or have questions, see our CHI paper for more background, and contact us; we’re happy to share our experience and conduct a PURE evaluation for your product.