User experience research has a wide variety of methods. From one perspective, it’s good because there’s usually a method for whatever research question you need to answer. On the other hand, it’s hard to keep track of all these methods.

Some methods, such as usability testing, are commonly used and have been around for decades. Other more recent additions (such as click testing) are useful variations on existing methods.

Selecting the right UX method means narrowing down the methods to those that will address (1) the primary research goal and (2) the real logistics of the resources needed to implement the method. We’ll cover some strategies on how to select the right method in an upcoming article.

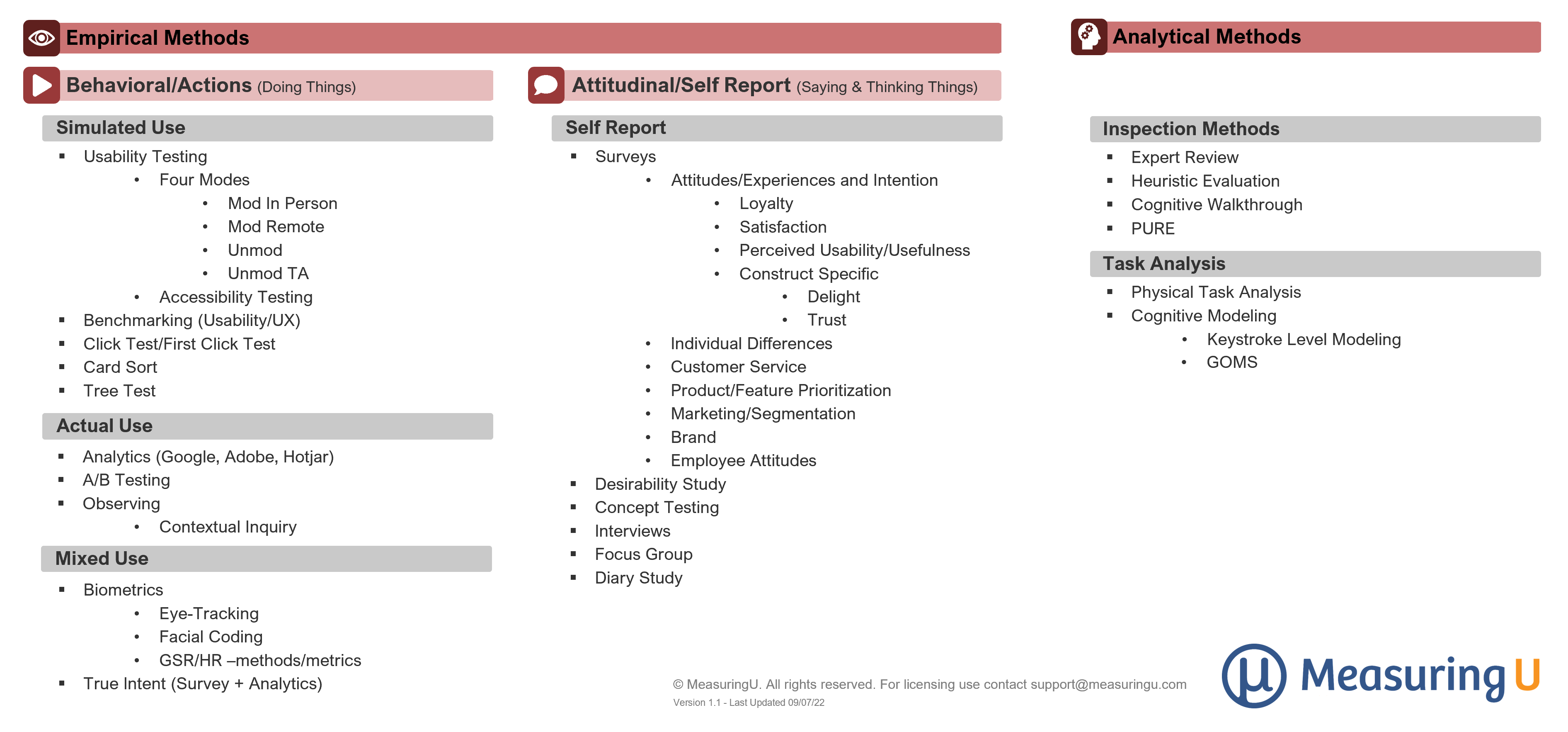

One of the first steps in understanding how to use UX methods is to have a broad understanding of how they relate. To a novice researcher, they may seem like a disjointed pile of methods, but there is some structure. In this article, we’ll discuss how we’ve grouped a set of common UX methods into a taxonomy, as shown in Figure 1.

Empirical Methods

The first branch in the taxonomy is between empirical and analytic methods.

Figure 1 shows that most UX methods are considered empirical—that is, you’re collecting data (e.g., observation, ratings, etc.) from people (users, customers, stakeholders, etc.). But it can be difficult or too expensive to find enough of the targeted participants (e.g., early-stage startup founders, investor relations managers at Fortune 500 companies). In those cases, you may consider analytic methods (discussed after empirical methods).

Empirical methods can be divided into more behavioral or attitudinal approaches.

Behavioral/Action Methods (Doing Things)

Observing behaviors is what differentiates much of UX research from more traditional market research that has a strong focus on measurement of attitudes and self-reported behavior collected through surveys or interviews.

Behaviors can be observed through watching actual product usage, simulating usage, or a combination of the two.

Simulated Use

An airplane simulator provides a realistic environment for prospective and current pilots to hone their skills. It can also be a way to understand how trained professionals react to unexpected and stressful situations. In controlled simulations, a lot can be learned about potential life-threatening errors before they happen. The same idea applies to simulated use in UX research. We often need to simulate the environment and software setup. For example, we can’t have professionals who work in finance posting incorrect entries in their working payroll or ledger systems. We can’t easily ask consumers to make a large online purchase or perform other sensitive tasks like checking their credit or applying for a loan while being watched and/or recorded.

Usability Testing

One of the most common methods for simulating use to observe behaviors is usability testing. A usability test involves having representative participants attempt realistic tasks with a product or interface. An evaluator (researcher, designer, or stakeholder) looks for problems the users encounter. Usability testing that primarily focuses on problem identification is called formative. Usability testing that focuses more on performance (e.g., completion rates, task times) is called summative. When a human is part of the simulation, it’s called a Wizard of Oz study.

There isn’t just one type of usability testing. In an earlier article, we categorized usability testing into four modes:

• Moderated In-Person

A facilitator is co-located with the participant (often in a lab).

• Moderated Remote

The participant and facilitator are in different locations. Screen-sharing software (such as Zoom or WebEx) allows the facilitator to remotely watch the participant attempt tasks with software or a website and allows for probing on problems.

• Unmoderated

Software like MUIQ® administers tasks automatically to participants around the world. You can collect a lot of data quickly and for a fraction of the cost of moderated testing (in-person or remote). In many cases, you have a recording of the participant’s screen and webcam, but there’s no way to simultaneously interact with participants.

• Unmoderated Think Aloud

A special type of remote unmoderated testing where participants share their screen (and sometimes webcam) and think aloud while attempting tasks. This also requires software (e.g., MUIQ or Usertesting.com) and tends to be suited for smaller-sample unmoderated studies.

• Accessibility Testing

We’ve included accessibility testing under the category of usability testing to differentiate this form of assessing accessibility from analytic approaches that typically use guidelines (e.g., WCAG). Accessibility testing as an empirical method involves recruiting from one or more target populations (e.g., people with hearing, visual, or cognitive impairments).

Benchmarking

Benchmarking is a summative evaluation using a moderated or unmoderated approach. The primary focus is on how well users of a system (e.g., software, website, or mobile app) perform tasks, for the purpose of comparison with previous versions of the system or its competitors. Retrospective UX benchmarking focuses on attitudinal measures (e.g., trust, appearance) and sometimes behavioral self-reports (e.g., behavioral intentions, usability issues).
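Because benchmark samples are often small, a completion rate is usually more informative when reported with a confidence interval. The following is a minimal sketch of one common choice, the adjusted-Wald interval; the function name, the 95% z-value, and the sample numbers (9 of 12 participants completing a task) are illustrative assumptions, not figures from a real study.

```python
import math

def adjusted_wald(successes, n, z=1.96):
    """Adjusted-Wald confidence interval for a completion rate.

    Adds z^2/2 successes and z^2 trials before computing a
    standard Wald interval, which behaves better at small n.
    """
    n_adj = n + z ** 2
    p_adj = (successes + z ** 2 / 2) / n_adj
    margin = z * math.sqrt(p_adj * (1 - p_adj) / n_adj)
    # Clamp to the valid range for a proportion.
    return max(0.0, p_adj - margin), min(1.0, p_adj + margin)

# Hypothetical benchmark task: 9 of 12 participants completed it.
low, high = adjusted_wald(successes=9, n=12)
print(f"Observed: {9 / 12:.0%}, 95% CI: {low:.0%} to {high:.0%}")
```

Comparing intervals across product versions or competitors gives a rough sense of whether an observed difference is larger than sampling noise.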

Click Testing

Click testing is a specialized type of usability testing where images or simple prototypes can be evaluated well before a product has been developed. Participants are asked where they would click to find functions or content. Because the first click can be predictive of ultimate task success, a first-click study can be used to evaluate probable success of a large number of tasks.

Card Sorting

Card sorting is an activity where participants group content, concepts, features, or functions into groups (with one or nested levels). When participants label the categories, it’s called an open card sort. If categories are already labeled and provided to participants, it’s a closed card sort. Hybrid card sorts provide existing labeled groups and allow participants to create their own.
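One common way to analyze card sort results is to count how often each pair of cards ends up in the same group. The sketch below assumes each participant's sort is stored as a dictionary mapping a group label to a list of cards; the card names, labels, and the `co_occurrence` helper are hypothetical.

```python
from collections import Counter
from itertools import combinations

def co_occurrence(sorts):
    """Return {(card_a, card_b): proportion of participants who
    placed both cards in the same group}. Pairs are alphabetized."""
    counts = Counter()
    for sort in sorts:
        for cards in sort.values():
            for pair in combinations(sorted(cards), 2):
                counts[pair] += 1
    n = len(sorts)
    return {pair: count / n for pair, count in counts.items()}

# Two hypothetical participants in an open card sort.
sorts = [
    {"Money": ["Invoices", "Payroll"], "People": ["Directory"]},
    {"Finance": ["Invoices", "Payroll", "Directory"]},
]
similarity = co_occurrence(sorts)
for pair, share in sorted(similarity.items()):
    print(pair, share)
```

Pairs with a high proportion are candidates for sharing a category in the navigation; the same matrix can feed a clustering step if a full grouping is needed.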

Tree Testing

A tree test is a type of usability test on the hierarchy/taxonomy of a navigation structure such as a website or software menu. Participants are asked where they would find content or features in a “tree” (which gets its name because initial tree tests were conducted using the Windows Explorer tree).

Actual Use

Usability testing and its variations (e.g., tree testing and click testing) have participants attempt task scenarios as if they were using the actual interface (simulated use). Using a flight simulator isn’t the same as flying, however, so simulated use methods may miss many of the “real-life” variables that impact outcomes. On the other hand, the disadvantage of actual use methods is that many confounding variables make isolating cause and effect difficult.

Analytics

Software and websites log a substantial amount of information such as clicks, time spent on pages or features, errors, and drop-offs. Software packages such as Google Analytics tell you something happened and can help make some sense of this data, but are less helpful in explaining why it happened.

A/B Testing

In A/B testing, users of websites or software applications are directed to one of two versions (A or B) to see which version (if either) has a higher conversion rate (usually determined through statistical analysis of clicks or purchases). When more than two versions of a single element are compared, the method is often called an A/B/n test; when several elements are varied in combination, it is known as a multivariate test.
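As a rough illustration of the statistical analysis involved, the sketch below compares two conversion rates with a pooled two-proportion z-test. This is one of several reasonable tests, not necessarily what any particular A/B testing tool uses, and the visitor and conversion counts are made up for the example.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical data: 100/1000 conversions for A, 130/1000 for B.
z, p_value = two_proportion_z_test(conv_a=100, n_a=1000, conv_b=130, n_b=1000)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

A small p-value suggests the difference in conversion rates is unlikely to be due to chance alone, but it says nothing about why one version performed better.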

Observing

Observation in UX research involves watching people use an existing product as they do their work (e.g., employees entering expense reports), either in person or remotely, without interfering and without simulation. The goal is to generate ideas about actual or potential problems and new features.

• Contextual Inquiry

A contextual inquiry involves a more structured observation and often a follow-up interview to see how people use a product in-context (when the context is a home, this is called a follow-me-home study).

Mixed Use

While many methods have both a behavioral and attitudinal component (e.g., usability testing and UX benchmarking), most have a dominant method (e.g., usability testing is defined by observing tasks rather than logging answers to post-study questionnaires). A small set of mixed-use UX research methods are more equal combinations of two methods, such as using biometrics during task performance or combining analytics and survey data in true intent studies.

Biometrics

Biometrics includes a family of methods that collect physiological data from participants. In UX research, biometrics are collected during task performance, either simulated or actual.

• Eye-Tracking

Equipment that tracks a person’s pupil location (and dilation) can provide an idea about where people look (or don’t look) as a way to understand how design impacts attention. This can be informative in both simulated (e.g., usability studies) and actual use situations (e.g., driving a car, shopping).

• Facial Coding

Analysis of facial expressions using a rubric to code emotions such as surprise, happiness, delight, and frustration can be used with attitudinal rating scales to assess emotional reactions to designs or experiences (e.g., when unboxing a new product).

• GSR/HR

Galvanic skin response (GSR) or heart rate (HR) (both requiring equipment) can be recorded to measure participants’ physiological reactions to designs (typically interpreted as indicators of emotional response).

True Intent

A true intent study aims to understand why participants are visiting a website and who they are. The method typically combines website logs with a survey and is often administered using a website intercept (pop-up) with software like MUIQ.

Attitudinal/Self Report Methods (Saying Things)

Attitudes affect actions, and the consequences of actions shape our attitudes. Attitude isn’t a perfect predictor of what will happen, but it’s often a good-enough predictor. Self-reported accounts aren’t an error-proof record of what has happened, but they are often the only realistic way of collecting descriptions of events.

Surveys

Surveys have been a staple of consumer research for a century and play a major role in user research, with most UX researchers using the method regularly. There isn’t a single type of survey, so it can be helpful to break down surveys into categories based on their primary research goal. Table 1 shows seven main types of surveys with examples (not an exhaustive list).

| Survey Type | Examples |
|---|---|
| Attitudes/Experiences and Intention | Loyalty; Satisfaction; Usability/Usefulness; Construct Specific (e.g., delight, clutter, synthetic voice quality) |
| Individual Differences | Product Experience; Tech-Savviness |
| Customer Service | Call Experience; Post-Purchase |
| Product/Feature Prioritization | MaxDiff; Kano; Top-Tasks/Jobs to Be Done |
| Marketing Segmentation | Preference Testing; Message Effectiveness; Customer Segmentation; Characteristics of a Market |
| Brand | Awareness; Attitudes; Lift (pre/post) |
| Employee Attitudes | Job Satisfaction; 360 Survey; Exit Survey |

Table 1. Main types of surveys with examples

Interviews

A targeted group of users (e.g., customers, stakeholders) can be interviewed in-person or remotely using a structured (following a script/fixed set of questions) or semi/unstructured (following the conversation to where it uncovers insights) format. Interviews are usually conducted 1:1 but can be conducted with groups.

Focus Groups

A focus group is a special type of group interview in which a group of (3-10ish) participants is asked questions by a facilitator for the purpose of generating ideas or getting early feedback on concepts.

Diary Study

In a diary study, participants are asked to note behaviors, attitudes, and experiences over a period of time (days, weeks, or years). Data can be collected in an analog format (a physical notebook) but most are conducted using a digital format (survey-type format with set intervals and ability to add audio/video).

Desirability

A desirability study is a special kind of survey where participants are shown a concept, design, or feature and typically rate it using the Microsoft reaction cards or another set of sentiments.

Concept Testing

Activities in concept testing include presenting a set of images or scenarios and then asking participants their attitudes, intention to use, and preferences when multiple concepts are presented. A variation of this is the five-second test, where participants only view the image or page design for five seconds then provide feedback on salient elements or design problems.

Analytic Methods

An alternative to empirical methods is to use analytic methods that, because they are evaluative, typically happen later in the design process when there’s either a proposed or an existing interface to analyze for potential problems. Analytic methods are broadly divided into inspection methods and task analysis.

Inspection Methods

Inspection methods include expert review, heuristic evaluation, cognitive walkthrough, and practical usability rating by experts (PURE).

Expert Review

In an expert review, one or multiple evaluators inspect an interface for potential problems, sometimes with tasks and guidelines but often informally, to catch any obvious issues based on their personal experience or knowledge of the product.

Heuristic Evaluation

A heuristic evaluation involves multiple evaluators examining an interface against a set of principles or design rules called heuristics. Heuristics can be thought of as simple and efficient rules that evaluators use as reminders of potential problem areas. Heuristics are typically derived from an examination of the problems uncovered in usability tests to generate overall principles.

Cognitive Walkthrough

A cognitive walkthrough is similar to a heuristic evaluation but with the emphasis on task scenarios that new users would likely perform with the software or website. An expert in usability principles then meticulously goes through each step, identifying problems users might encounter as they learn to use the interface.

PURE

Practical usability rating by experts (PURE) is a more recent method that combines cognitive walkthroughs with a scoring rubric. Evaluators score each task step on a three-point scale (1: Fine, 2: Flail, or 3: Fail/Bail).
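To make the rubric concrete, here is a minimal sketch of how step ratings for one task might be rolled up. The aggregation shown (summing step ratings and flagging the worst step) is an assumption for illustration, as are the function name and the sample ratings; the text above only specifies the three-point scale.

```python
RATING_LABELS = {1: "Fine", 2: "Flail", 3: "Fail/Bail"}

def pure_task_summary(step_ratings):
    """Summarize one task's step ratings on the 1-3 scale.

    Assumption: the task score is the sum of step ratings and the
    task is flagged by its worst (highest) step rating.
    """
    for rating in step_ratings:
        if rating not in RATING_LABELS:
            raise ValueError(f"Rating must be 1, 2, or 3, got {rating!r}")
    worst = max(step_ratings)
    return {
        "score": sum(step_ratings),
        "worst_step": worst,
        "worst_label": RATING_LABELS[worst],
    }

# Hypothetical four-step task rated by an evaluator.
summary = pure_task_summary([1, 2, 1, 3])
print(summary)
```

Lower scores indicate less friction, so scores for the same task can be compared across design iterations as long as the step breakdown stays comparable.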

Task Analysis

Task analysis requires decomposing a goal-oriented task (e.g., submitting an expense report) into component steps to understand potential friction points and solutions. Task analysis methods are broadly divided into physical and cognitive analysis, although the two can be used together in a comprehensive task analysis.

Physical Task Analysis

Although less often used in the development of digital products, physical task analysis can play an important role in evaluating designs for industrial products and services. For example, the early time-and-motion studies to increase efficiency of assembly line workers were strongly focused on analysis of physical tasks.

Cognitive Modeling

Cognitive modeling uses a collection of methods that approximate what’s happening in the minds of users based on principles derived from psychological research. These methods are applied to the prediction of behaviors commonly, but not exclusively, with digital products.

• GOMS

The Goals, Operators, Methods, and Selection Rules (GOMS) method estimates the time it takes a skilled user to complete tasks. The task is broken into elementary steps (operators), each with an estimated duration, and the durations are summed to estimate total task time.

• Keystroke Level Modeling (KLM)

Keystroke Level Modeling is a simpler instance of GOMS where only four operators are available for each step: keystroke, pointing, homing/drawing, and a mental operation.
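A KLM estimate is essentially a sum of per-operator times over the sequence of steps in a task. The sketch below uses approximate operator times that are commonly cited for KLM (treat them as assumptions and substitute values appropriate to your users); the drawing operator is omitted because its time depends on what is drawn, and the task sequence is hypothetical.

```python
# Approximate operator times in seconds (assumed values).
OPERATOR_SECONDS = {
    "K": 0.20,  # keystroke or button press, skilled typist
    "P": 1.10,  # point at a target with a mouse
    "H": 0.40,  # home hands between keyboard and mouse
    "M": 1.35,  # mental preparation
}

def klm_estimate(sequence):
    """Sum operator times for a string of operator codes, e.g. 'MHPK'."""
    return sum(OPERATOR_SECONDS[op] for op in sequence)

# Hypothetical task: think, reach for the mouse, point at a field,
# click, return to the keyboard, then type four characters.
sequence = "MHPKH" + "K" * 4
estimate = klm_estimate(sequence)
print(f"Estimated skilled-user time: {estimate:.2f} s")
```

Comparing estimates for two candidate designs of the same task gives a quick, participant-free indication of which is likely to be faster for experienced users.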