Surveys are an essential method for collecting data. But like all research methods, surveys have their limitations. Unless the survey is administered by a facilitator, a respondent has only the survey’s instructions, questions, and response options for guidance.

Earlier, we wrote about ways to improve the clarity of your questions and about words to watch for when writing them. But despite your best efforts, some participants will inevitably answer questions inaccurately.

Pilot testing can help resolve many issues that reduce the likelihood of correct answers. In preparing for your pretest, look for these seven sources of incorrect answers (adapted from Groves et al., 2009). We’ve addressed some of these in previous articles and will cover ways to deal with the others in future articles.

1. Failure to Encode a Memory

Encoding is the process of putting an event into memory. Respondents can’t respond correctly to a question if they never encoded the memory in the first place.

When you booked your Airbnb, how much did the property reviews influence your decision?

How much did the price influence your decision not to purchase the service contract?

Did the free Clorox wipes in the store make you feel safer to shop?

Things happen to us all the time, so we may simply fail to notice many of the details that marketers and product designers care about. If a memory was never encoded, respondents won’t be able to recall such details. If they’re required to respond anyway, they might pick the answer that seems most likely or select one at random just to continue.

2. Forgetting

People forget. No surprise there. Participants might encode a memory that they are later unable to retrieve. As time goes on, memories fade. There are four primary culprits for why participants forget:

- Retrieval failure: The more time has passed and the less salient the event, the less it’s recalled.

- Reconstruction: Gaps are filled in with a generic memory.

- Distortion: Our memories of an event are influenced by contemporary details such as photos and videos.

- Mismatched terms: We might not associate memories with terms asked by interviewers or in surveys.

3. Misinterpreting the Question

Respondents may have a perfect memory and an accurate recollection of an event, but they may misunderstand what’s being asked. This is both more common and more preventable than encoding and memory problems. There are at least seven common reasons participants misinterpret your question:

- Grammatical and lexical ambiguity: For example, does “you” mean just you or anyone in your household?

- Excessive complexity: Many embedded clauses, left-embedded syntax, and adjunct “wh” questions can make questions difficult to understand.

- Faulty presuppositions: Questions might include assumptions that are not true.

- Vague concepts: For example, are children under 18, or under 21? Do infants count?

- Vague quantifiers: Words such as near, very, quite, much, most, few, often, and several may be interpreted differently by different respondents.

- Unfamiliar terms: Watch for acronyms (MPG, IRA, 401(k)) and other terms (assets, deductible) that you may understand but respondents may not.

- False inferences: Some respondents may read into the intent of a question, and others will read questions more literally.

4. Flawed Judgment or Poor Estimation

Making good judgments takes practice (for some, a lifetime), and not all survey participants will be good at judging or estimating past behavior or future behavior.

How much did you spend on Amazon.com last month?

How likely are you to purchase the product?

Which of the following designs is best?

Respondents may struggle when estimating past and future amounts of expenditures, may have trouble judging design effectiveness, or may be swayed by secondary elements. In studies of long-term dietary recall, Smith (1991) found that people tended to rely on generic knowledge of their diets, remembering with reasonable accuracy how often they ate various foods but being less accurate regarding portion size.

These aren’t fatal flaws, because you can often correct for poor estimation. For example, top-box scores are a good (but not perfect) predictor of actual behavior based on respondents’ estimates of their future intent. You can also gauge the accuracy of estimates through corroboration. For example, we once asked participants how much they had spent on recent website visits and then checked the actual amounts against receipts; participants tended to overestimate how much they had spent.
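If you analyze survey data in code, both corrections are simple to compute. Below is a minimal Python sketch, assuming a 5-point purchase-intent item where only the highest point counts as the top box (some analyses use a top-two box instead) and paired lists of self-reported and receipt-verified spending. The function names and sample numbers are hypothetical and for illustration only.

```python
from statistics import mean


def top_box_score(responses, top=5):
    """Proportion of responses at the top scale point (assumed: 5 on a 5-point scale)."""
    if not responses:
        raise ValueError("responses must not be empty")
    return sum(1 for r in responses if r == top) / len(responses)


def mean_estimation_error(estimates, actuals):
    """Mean signed difference between self-reported and verified amounts.

    Positive values mean respondents overestimated on average.
    """
    if not estimates or len(estimates) != len(actuals):
        raise ValueError("need equal-length, non-empty lists")
    return mean(e - a for e, a in zip(estimates, actuals))


# Hypothetical illustrative data, not from any study.
intent = [5, 4, 5, 3, 2, 5, 4, 1, 5, 4]
print(top_box_score(intent))  # 0.4

reported = [110.0, 46.0, 82.0]  # what respondents said they spent
receipts = [100.0, 40.0, 80.0]  # what the receipts showed
print(mean_estimation_error(reported, receipts))  # 6.0
```

A signed mean (rather than an absolute one) is used here so the direction of the bias, such as the overestimation we observed, stays visible.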

5. Answer Formatting

Respondents can incorrectly answer a question based on how the response options are displayed.

A body of folklore about good and bad response-scale formatting makes it hard to distinguish research-based recommendations from habits left over from decades of practice. We’ve extensively studied response formatting and have usually found little impact of format differences on UX measurement. For example, we’ve found that potential concerns such as left-side bias, acquiescence bias, the number of scale points (beyond three), and even complete labeling have tiny, if any, effects on responses.

In contrast, we’ve found that using too few response options (three or fewer) and alternating item tones do tend to lead to more incorrect responses.

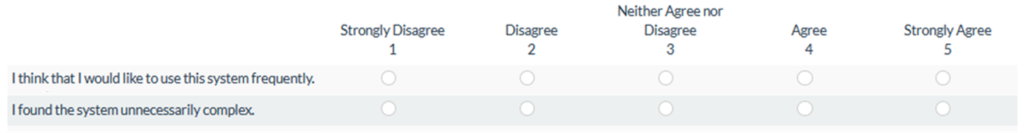

For example, below are two of the ten items from the System Usability Scale (SUS). The item tone is reversed in half of the items in an attempt to control a hypothesized acquiescence bias (tendency to agree rather than disagree with a statement).

Figure 1: Two items from the SUS; the first with a positive tone and the second with a negative tone.

If someone found an experience easy, they’d need to disagree with items that suggested the experience was hard and agree with items that said the experience was easy. We’ve found that some respondents forget to reverse their responses for negative-tone items, providing incorrect information. When we created an all-positive version of the SUS, we found little evidence of the hypothesized acquiescence bias that the alternating-tone approach supposedly avoids.
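To see why forgetting to reverse matters, it helps to look at how the standard SUS is scored: each odd-numbered (positive-tone) item contributes the response minus 1, each even-numbered (negative-tone) item contributes 5 minus the response, and the sum is multiplied by 2.5 to give a score from 0 to 100. The Python sketch below assumes the all-positive version is scored like the positive items (response minus 1 for every item). The `looks_unreversed` check is a hypothetical heuristic with an assumed agreement threshold of 4, not an established rule.

```python
def _validate(responses):
    # Expect ten responses on the 1-5 agreement scale, in item order.
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("expected ten responses between 1 and 5")


def sus_score(responses):
    """Standard (alternating-tone) SUS score from 0 to 100."""
    _validate(responses)
    total = 0
    for i, r in enumerate(responses):
        # i is zero-based, so an even i is an odd-numbered, positive-tone item.
        total += (r - 1) if i % 2 == 0 else (5 - r)
    return total * 2.5


def all_positive_sus_score(responses):
    """All-positive version, assuming every item is scored like a positive item."""
    _validate(responses)
    return sum(r - 1 for r in responses) * 2.5


def looks_unreversed(responses, agree=4):
    """Hypothetical heuristic: high average agreement with BOTH positive- and
    negative-tone items suggests the respondent forgot to reverse."""
    _validate(responses)
    positive, negative = responses[0::2], responses[1::2]
    return (sum(positive) / len(positive) >= agree
            and sum(negative) / len(negative) >= agree)


intended = [5, 1, 5, 1, 5, 1, 5, 1, 5, 1]  # easy experience, answered as intended
forgot = [5] * 10                          # easy experience, forgot to reverse
print(sus_score(intended))                 # 100.0
print(sus_score(forgot))                   # 50.0
print(looks_unreversed(forgot))            # True
print(all_positive_sus_score(forgot))      # 100.0
```

In this sketch, a respondent who found the system very easy but strongly agreed with every item scores 50 instead of 100 on the alternating version, while the same answers produce the intended score on the all-positive version.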

6. Dishonesty

Respondents may not feel comfortable providing the requested information, or they may be trying to game the system. In the latter case, some methods can help identify “cheaters” who answer screening questions incorrectly to qualify for the honorarium.
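One approach to catching screener cheaters is to include a trap option, such as a product or brand that doesn’t exist, and flag anyone who claims to use it; another is to flag respondents who select implausibly many options. The Python sketch below illustrates both checks. The fictitious brand name and the selection limit are hypothetical placeholders you would choose for your own screener.

```python
# Hypothetical trap option: a made-up brand no genuine respondent should claim to use.
FICTITIOUS_OPTIONS = {"Zentrova"}


def flag_suspect(products_used, max_selections=None):
    """Return True if a screener response looks like an attempt to game the screener.

    products_used: the options the respondent selected (any iterable of strings).
    max_selections: optional cap on plausible selections (an assumed, study-specific value).
    """
    selected = set(products_used)
    # Claiming to use a product that doesn't exist is a strong warning sign.
    if selected & FICTITIOUS_OPTIONS:
        return True
    # Selecting an implausible number of options can be another sign of over-claiming.
    if max_selections is not None and len(selected) > max_selections:
        return True
    return False


print(flag_suspect({"Dropbox", "Zentrova"}))  # True
print(flag_suspect({"Dropbox"}))              # False
```

Flags like these identify responses worth reviewing rather than proving dishonesty, so it’s reasonable to combine them with other screening information before excluding anyone.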

To keep respondents from feeling uncomfortable, remove sensitive questions unless they’re necessary. Some sensitive questions, such as those about income or medical procedures, may be essential in certain screeners. Permission statements or broad income categories can reduce dishonest answers, but even with these in place, expect some incorrect information when you ask respondents to divulge sensitive information to strangers.

7. Not Following Instructions

In UX research, a common tenet is that people don’t read manuals. We expect the same applies to survey instructions. Even simple instructions can be overlooked as participants quickly scan questions.

Do you have an Amazon Prime account? (Do not include access you might have from family members.)

Respondents who overlook the parenthetical instruction may report access through their household rather than through their own account.

Which of the following features are least important?

Respondents who miss or forget the word “least” may select the most important features instead.

Imagine you need to buy a dishwasher to replace an old one. Time is not an issue (shipping time/arrival time). On our website, please find a stainless steel dishwasher under $1000 that you feel is the best, most reliable unit. Copy the full name of the product as you will be asked for it later. Be sure to include the brand (GE, Samsung, LG, Whirlpool, etc.).

It isn’t always possible to avoid such complexity, but when it’s necessary, strive to write and format the instructions to make them as easy to follow as possible.

Summary

People may answer survey questions incorrectly for a variety of reasons:

- Failure to encode a memory: Respondents can’t correctly answer a question if they have never encoded the relevant memory.

- Forgetting: Respondents may have encoded the relevant memory but may be unable to retrieve it accurately due to the passage of time or distortion (e.g., generic memory, contemporary details, mismatched terms).

- Misinterpreting the question: Respondents may encode and retrieve the relevant memory but misunderstand the question (e.g., grammatical or lexical ambiguity, excessive complexity, faulty presuppositions, vague concepts, vague quantifiers, unfamiliar terms, false inferences).

- Flawed judgment or poor estimation: Respondents differ in their abilities to make judgments and estimate past or future behavior.

- Answer formatting: Many differences in answer formats don’t substantially affect resulting measurements, but some do (e.g., providing three or fewer response options, alternating item tone).

- Dishonesty: Respondents might feel uncomfortable accurately answering sensitive questions, and in some cases, might answer screening questions dishonestly to get into a survey and secure the honorarium.

- Not following instructions: Participants moving quickly through a survey can overlook even simple instructions, and complex instructions exacerbate this problem.

UX researchers should keep these issues in mind when designing, analyzing, and interpreting surveys.